Overview

Machine-learning models have become essential tools across many industries. However, training these models requires large labeled datasets, which are expensive and time-consuming to produce.

Active learning is a supervised machine learning approach that reduces annotation effort by training on a small number of carefully chosen samples. Annotating large datasets is one of the biggest challenges in building machine learning (ML) models, and active learning can help you overcome it.

This documentation serves as a comprehensive guide for users who wish to implement the Active Learning Pipeline using Dataloop's platform.

Active Learning Pipeline in Dataloop (ALP)

Dataloop's Active Learning Pipeline (ALP) is a powerful and customizable feature designed to automate and streamline the iterative model training process on unstructured data.

Active learning is a machine learning method that selects the most informative data points to label, prioritizing those that add the most value to the model.

By selectively labeling only informative examples, active learning makes the learning process more efficient and achieves high accuracy with fewer labeled samples.
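To make the idea of "informative" data points concrete, here is a minimal sketch of uncertainty sampling, one common active learning strategy. It is an illustration only: this document does not state which selection strategy the ALP applies, and the item ids and probabilities below are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_informative(predictions, k):
    """Return the ids of the k items whose predicted class distribution is most uncertain."""
    ranked = sorted(
        predictions,
        key=lambda item_id: entropy(predictions[item_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical model outputs: item id -> class probabilities
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # confident prediction
    "img_002": [0.34, 0.33, 0.33],  # very uncertain prediction
    "img_003": [0.70, 0.20, 0.10],  # moderately uncertain prediction
}

# The two most uncertain items are the ones worth sending to annotators first.
to_label = select_most_informative(predictions, k=2)
```

Items the model is already confident about (such as `img_001` here) are left for later, which is how fewer labels can yield comparable accuracy.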

The ALP automates the active learning workflow, making it accessible to users without a strong technical background.

The pipeline simplifies and accelerates the training of high-quality machine learning models by automating the data ingestion, filtering, annotation task creation, model training, evaluation, and comparison processes.

You can customize the active learning pipeline as per your requirements.

If you are looking for a way to improve your AIOps capabilities, then the Dataloop Active Learning Pipeline is the way to go.

Active Learning Pipeline Flow

Dataloop’s Active Learning Pipeline (ALP) is designed to streamline and optimize the process of training AI models by incorporating human-in-the-loop annotations to enhance the quality of training data. The pipeline is divided into two main flows:


Ground Truth Enrichment - The upper flow

Training a new version of your model based on the collected data - The lower flow

Upper Flow: Collection of Ground-Truth Data

This flow focuses on collecting and refining ground truth data, which serves as high-quality, labeled data for training models. The collection of ground truth data consists of the following steps:

Trigger The Upper Flow

The ALP’s upper flow can be triggered in two ways:

Event-based trigger: An automatic trigger based on the item_created event is set on the first node (Raw Dataset Node) in the pipeline. This means that whenever a new item is created or synced in the designated dataset, the Active Learning Pipeline is automatically initiated.

Configuration: The specific dataset used for this step is set under the Pipeline Variables as the Raw dataset. This can be modified anytime to change the dataset source.

On demand: You can manually trigger the upper flow from the Raw Dataset interface. Here’s how:

1. Open the Dataloop main menu.

2. Go to Data and open the dataset containing your raw data.

3. Browse the dataset to select the specific item(s) to be used.

4. In Dataset Actions, choose Run with Pipeline.

5. Select Active Learning Pipeline from the list of available pipelines.

6. Click Execute. A confirmation message will indicate that the ALP has started.

7. To monitor progress, navigate to the Pipelines page and view the status of the Active Learning Pipeline.

Note

Ensure that the Active Learning Pipeline is active before triggering it, as an inactive pipeline will not initiate the ground truth enrichment process.

Annotate New Data

Model Predict Node (YOLOv8): The active learning pipeline continues with a Model Predict node, using YOLOv8, where a pre-trained model generates annotations on the raw data. The selected Model ID for this pre-annotation step is set in the Pipeline Variables under the 'Best model' variable and can be adjusted at any time.

Human in The Loop (HITL) - Human Annotation Tasks: Once the pre-annotation step using your model is done, data items move directly into human annotation/review tasks. The default ALP consists of labeling and review (QA) tasks to correct the model's pre-annotations. Tasks can be customized according to your needs.

Split Data into Ground Truth Subsets

ML Data Split Node: The ML Data Split node randomly splits the annotated data into three subsets based on the given distribution percentages. By default, the distribution is 80-10-10 for the Train-Validation-Test subsets. Tags are added to each item's metadata under metadata.system.tags, for example:

{ "metadata": { "system": { "tags": { "train": true } } } }

These metadata tags make the items easy to find later and are used as DQL filters for training and evaluation.

Ground Truth - Dataset Node: The Ground Truth dataset stores the Ground Truth data that will be used to train the model. The data will be cloned from the Raw Data dataset that was selected in the first pipeline node. The selected Dataset ID for the ground truth step collection is set in the Pipeline Variables under the ‘Ground Truth dataset’ variable and can be adjusted at any time.

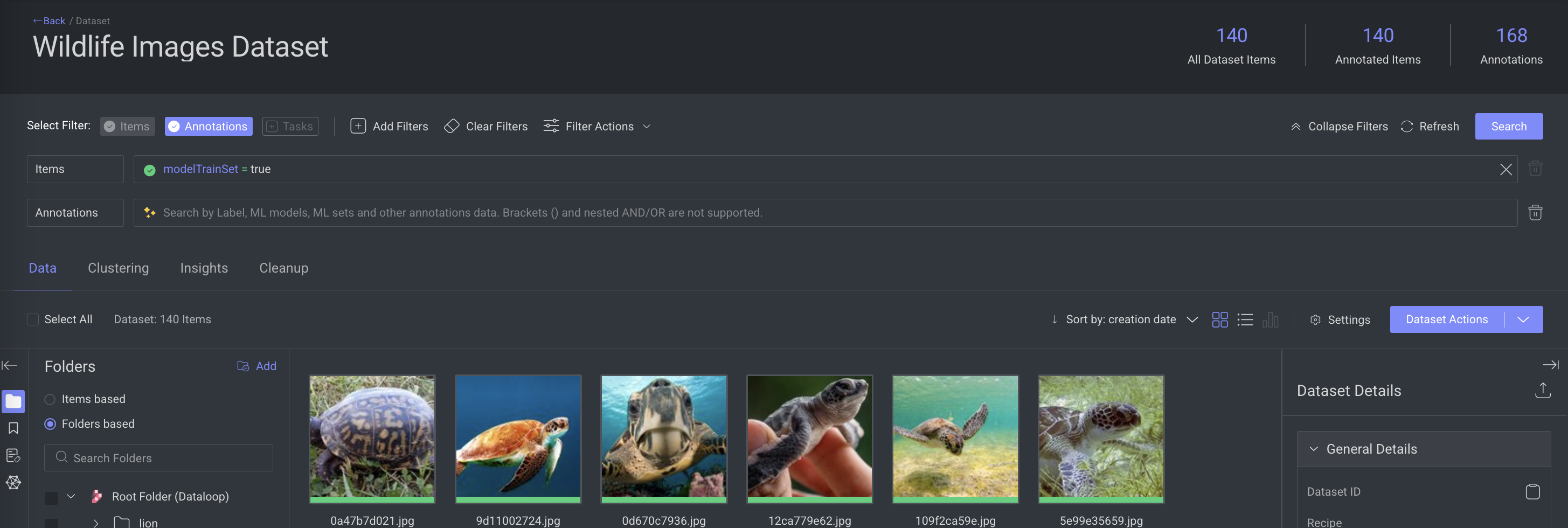

You can use the Dataset Browser at any time to see the latest ML subset divisions, using the smart search to filter items based on items’ subsets, such as ModelTestSet, ModelTrainSet, and ModelValidationSet.

Splitting data into separate folders in Ground-Truth dataset:

To distribute data into distinct folders within the Ground-Truth dataset, use three Dataset Nodes for the ground truth step instead of one, each linked to a different output port of the ML Data Split node.
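The random 80-10-10 split described for the ML Data Split node can be sketched in plain Python as follows. This is not the node's actual implementation: the function name, the `seed` parameter, and the `validation` and `test` tag names are assumptions made for illustration (only the `train` tag appears in the example above).

```python
import random


def ml_data_split(item_ids, distribution=(80, 10, 10), seed=None):
    """Randomly assign each item to a subset and return the metadata tag it would carry."""
    names = ("train", "validation", "test")  # assumed tag names
    if sum(distribution) != 100:
        raise ValueError("distribution percentages must sum to 100")
    rng = random.Random(seed)
    shuffled = list(item_ids)
    rng.shuffle(shuffled)
    total = len(shuffled)
    train_end = total * distribution[0] // 100
    validation_end = train_end + total * distribution[1] // 100
    tagged = {}
    for index, item_id in enumerate(shuffled):
        if index < train_end:
            subset = names[0]
        elif index < validation_end:
            subset = names[1]
        else:
            subset = names[2]
        # Same shape as the metadata example shown for the ML Data Split node
        tagged[item_id] = {"metadata": {"system": {"tags": {subset: True}}}}
    return tagged


items = [f"item_{i}" for i in range(100)]
tagged = ml_data_split(items, seed=0)

# Count how many items landed in each subset
counts = {"train": 0, "validation": 0, "test": 0}
for meta in tagged.values():
    (subset,) = meta["metadata"]["system"]["tags"]
    counts[subset] += 1
```

With 100 items and the default distribution, the sketch places 80 items in train and 10 each in validation and test; which items land where depends on the shuffle.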

Lower Flow: Create, Train, Deploy New Model Version

Creating and training new models using ground truth data consists of the following steps:

Trigger New Model Version

Create a New Model: The first step (Create New Model node) clones the base YOLOv8 model using the Pipeline Variables set on the node inputs (base model, ground truth dataset, training subset, validation subset, and model configuration). The node outputs a model that is prepared for training. The name of the newly created model is taken from the text box in the node configuration panel. If a model already exists with the same name, an incremental number will be automatically added as a suffix.

Trigger the lower flow:

Cron Trigger (recommended): Initiate the creation and training of a new model version using a scheduled cron trigger (e.g., running the process once a week).

Manual Trigger with SDK: To execute the pipeline manually, use the following SDK snippet. Provide the ID of the node to start from (e.g., the 'Create New Model' node) as input.

This will override and remove any existing cron trigger set on the first node, allowing the lower flow to begin execution.

It allows the execution of any flow within the pipeline, not limited to flows that begin with the designated 'start node'.

The flow will handle tasks such as creating and training a new model version.

    import dtlpy as dl

    # Fetch the pipeline, then start execution from a specific node
    pipeline = dl.pipelines.get(pipeline_id='pipeline_id')
    pipeline.execute(
        execution_input={},  # input of the node
        node_id='node_id',   # ID of the node to start from
    )

To copy the Node ID: In the Pipelines page, click on the Node -> Click Actions -> Select Copy Node ID from the list.

To remove Cron Trigger from UI: In the Pipelines page, click on the Node -> In the right-panel, disable (toggle button) the Trigger (optional) option.

Event-Based or Custom Logic Trigger: Use an event trigger or add your custom logic within a code node to initiate the pipeline. For instance, you can design the trigger to activate the flow based on specific conditions, such as the occurrence of a certain number of Dataloop events.
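As an illustration of the custom logic option, the sketch below counts incoming events and fires a callback once a threshold is reached. It is plain Python rather than Dataloop SDK code: the class name, the `on_trigger` callback, and the event dictionaries are hypothetical, and the counter lives in memory, so a real code node would need to persist it between executions.

```python
class EventCountTrigger:
    """Fire a callback once every `threshold` events (hypothetical helper)."""

    def __init__(self, threshold, on_trigger):
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        self.on_trigger = on_trigger
        self.count = 0

    def handle_event(self, event):
        """Record one event; return True if this event fired the trigger."""
        self.count += 1
        if self.count >= self.threshold:
            self.count = 0  # start counting towards the next run
            self.on_trigger(event)
            return True
        return False


# Usage: collect the events that would have started the lower flow
fired = []
trigger = EventCountTrigger(threshold=3, on_trigger=fired.append)
results = [trigger.handle_event({"item_id": i}) for i in range(7)]
```

In practice, `on_trigger` would be replaced by whatever starts the lower flow, for example the manual SDK execution described above.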

Train & Evaluate

Train Model: The Train Model node executes the training process for the newly created model version. The model will be trained over the Ground-Truth dataset using the train and validation subsets that were saved on the model during its creation.

Evaluate Node - New Model: The Evaluate Model node creates an evaluation for the newly created model based on the entire test subset of the Ground-Truth dataset (as defined in the variables). The evaluation will be used later for comparison with the base model evaluation (as defined in the 'Best model' variable).

To evaluate a model, a test subset with ground truth annotations will be compared to the predictions made during the evaluation process.

The model will create predictions on the test items, and scores will be given based on the annotation types. Currently supported types for scoring include classification, bounding boxes, polygons, segmentation, and point annotations.

By default, the annotation type(s) to be compared are defined by the model output type. Scores include a label agreement score, an attribute agreement score, and a geometry score (for example, IOU). Scores will be uploaded to the platform and available for other uses (for example, comparing models).

Evaluate Node - Base Model: The Evaluate Model node creates an evaluation for the base model, based on the entire test subset of the Ground-Truth dataset (as defined in the variables), to make sure its evaluation is up-to-date. The evaluation will be used later for comparison with the newly created model.
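The geometry score mentioned above uses IOU (intersection over union) as an example. For bounding boxes, the standard calculation can be sketched as below. The `(left, top, right, bottom)` box format and the function name are assumptions for illustration, and the platform's own scoring code may differ in its details.

```python
def box_iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (left, top, right, bottom)."""
    inter_left = max(box_a[0], box_b[0])
    inter_top = max(box_a[1], box_b[1])
    inter_right = min(box_a[2], box_b[2])
    inter_bottom = min(box_a[3], box_b[3])

    # Width and height are clamped at zero when the boxes do not overlap
    inter_width = max(0.0, inter_right - inter_left)
    inter_height = max(0.0, inter_bottom - inter_top)
    intersection = inter_width * inter_height

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


ground_truth = (0, 0, 10, 10)   # annotated box from the test subset
prediction = (5, 0, 15, 10)     # box predicted by the model
iou = box_iou(ground_truth, prediction)
```

Here the boxes overlap on half of their area, so the intersection is 50, the union is 150, and the IOU is one third. Identical boxes score 1.0 and disjoint boxes score 0.0.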

Compare Models & Deploy the Best Version

Compare Models: The Compare Models node compares two models: a previously trained model and a newly trained model. The default Dataloop comparison node can compare any two models that have either:

Uploaded metrics to model management during model training, or been evaluated on a common test subset.

The Compare Models node uses the Comparison Config variable.

Update Variable (‘Best Model’): If the winning model is the new model (based on the comparison config), the Update Variable node will automatically deploy the model and update the Model ID in the 'Best model' variable. Every node in the pipeline that uses this variable (predict, create model) will start using the new model immediately.
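The compare-then-update logic of these two nodes can be sketched as follows. This is a simplified illustration, not the Compare Models node itself: the real Comparison Config schema is not shown in this guide, so the `metric` and `min_improvement` parameters, the metric name, and the model ids are all hypothetical.

```python
def pick_best_model(best, candidate, metric="precision", min_improvement=0.0):
    """Return the id of the model that should be stored in the 'Best model' variable."""
    improvement = candidate["metrics"][metric] - best["metrics"][metric]
    if improvement > min_improvement:
        return candidate["id"]  # the new model wins and replaces the current best
    return best["id"]           # otherwise keep the current best model


# Hypothetical pipeline variables and evaluation results
variables = {"Best model": "model_v1"}
best = {"id": "model_v1", "metrics": {"precision": 0.81}}
candidate = {"id": "model_v2", "metrics": {"precision": 0.86}}

variables["Best model"] = pick_best_model(best, candidate, min_improvement=0.01)
```

Requiring a minimum improvement is one way to avoid swapping models over differences that are within evaluation noise; whether the default comparison applies such a margin is not stated here.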

Access Active Learning Pipeline

To access and manage the Active Learning Pipeline (ALP) in Dataloop, specific permissions are required to ensure that only authorized users can view, edit, and control pipeline operations. Here’s an outline of the permission requirements:

| Roles | Permissions |
|---|---|
| Project Owner | View and Edit |
| Developer | View and Edit |
| Annotation Manager | View only |

To access Active Learning Pipeline:

Go to the Marketplace -> Pipelines tab.

Select the Active Learning Template.

Click Install. The ALP template will be installed and available in the Pipelines page.

Configure Your Active Learning Pipeline Nodes

Dataset Node - Raw Data

Click on the Raw Dataset Node.

(Optional) Update the node name.

Set a fixed dataset from the list or set a variable.

(Optional) Uncheck if you do not want.

Configure the pipeline variables and nodes according to your specific requirements.

Manage Your Pipeline Variables

To control your pipeline in real-time, the Active Learning template provides pipeline variables, enabling you to execute the pipeline with your preferred base model, dataset, configurations, and other specifications.

To customize the variables based on your requirements:

From the top, click on the Pipeline Variables icon.

Click Manage Variables.

Identify variables and click the Edit icon to set values according to your needs. For more information on variables, read below:

Active Learning Variables

Here are the variables managed within the Active Learning Pipeline:

Raw Dataset (Dataset): The Raw Dataset is where new, unlabeled data is uploaded for processing. The active learning pipeline uses this dataset as the starting point for generating predictions, which are later corrected by annotators to create the Ground Truth Dataset. Set the Dataset ID of your raw data.

To copy the Dataset ID: Go to Dataloop main menu -> click Data -> Identify the Dataset and click More Actions (three dots) -> Select Copy Dataset ID from the list.

Best model (Model): This is the trained or baseline model version you want to use to start your active learning pipeline. It serves as the initial model for selecting the most uncertain or valuable samples. Set the model ID of the trained or baseline model version you want to start your active learning pipeline with.

To copy the Model ID: Dataloop main menu -> click Models -> Versions tab -> More actions (three dots) -> Copy Model ID.

Note

The model must be located within your project scope. You can import any public model provided to your active project from the Model Management page.

Ground Truth Dataset (Dataset): This dataset contains labeled and verified data, which serves as the reference standard for training and validating your model. The quality of the ground truth data directly impacts the performance of your model and ensures reliable evaluation during the active learning cycle. Set the Dataset ID of your Ground Truth Dataset.

To copy the Dataset ID: Go to Dataloop main menu -> click Data -> Identify the Dataset and click More Actions (three dots) -> Select Copy Dataset ID from the list.

Train/Validation Subset Filters (JSON): Set a DQL filter to retrieve Train/Validation set items from the Ground Truth Dataset for model training. We recommend using the default filter, which targets items marked with the train/validation metadata tag added by the ML Data Split node.

Test Subset Filter (JSON): Set a DQL filter to retrieve Test set items from the Ground Truth Dataset for model evaluation. We recommend using the default filter, which targets items marked with the test metadata tag added by the ML Data Split node.

Model Configuration (JSON): Set the configuration for new models (for the 'Create New Model' node). The configuration will be used for both training and prediction. If no value is provided (empty JSON '{}'), then the base model's configuration will be applied.

Model Comparison Configuration (JSON): Set the comparison config for the models' comparison (new model vs. best model) based on the evaluation results (precision-recall).

For more details, read here: GitHub.
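To show how the subset filter variables relate to the metadata tags, the sketch below builds filters keyed on `metadata.system.tags` and applies them to a few in-memory items. The filter layout (`filter` with an `$and` list) is an assumption about the DQL shape, the template's actual default filters are not reproduced in this guide, and the matcher is a local stand-in rather than the platform's query engine.

```python
def get_by_path(document, dotted_path):
    """Follow a dotted path such as 'metadata.system.tags.train' through nested dicts."""
    current = document
    for key in dotted_path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def matches(item, subset_filter):
    """True if the item satisfies every field condition in the filter's '$and' list."""
    conditions = subset_filter["filter"]["$and"]
    return all(
        get_by_path(item, path) == expected
        for condition in conditions
        for path, expected in condition.items()
    )


# Assumed filter shapes for the train and test subsets
train_filter = {"filter": {"$and": [{"metadata.system.tags.train": True}]}}
test_filter = {"filter": {"$and": [{"metadata.system.tags.test": True}]}}

# Hypothetical items as they might look after the ML Data Split node
items = [
    {"name": "a.jpg", "metadata": {"system": {"tags": {"train": True}}}},
    {"name": "b.jpg", "metadata": {"system": {"tags": {"test": True}}}},
    {"name": "c.jpg", "metadata": {"system": {}}},  # not yet split
]

train_items = [item["name"] for item in items if matches(item, train_filter)]
test_items = [item["name"] for item in items if matches(item, test_filter)]
```

Items without a subset tag (such as `c.jpg`) match neither filter, so they take part in neither training nor evaluation.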

Customizing an Active Learning Pipeline

Modify the pipeline composition using drag & drop to add custom processing steps or rearrange the existing nodes.

Configurable Logic: Every step and piece of logic within the pipeline is fully configurable.

Use the Code node to incorporate your code to introduce custom processing steps within the pipeline. For example:

Filter data for the Annotation tasks (after inferencing).

Trigger the model creation & train flow based on event counts.

Nonrandom ML data split.
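As one possible shape for the nonrandom ML data split listed above, the sketch below assigns each item to a subset from a stable hash of its key, so the same item always lands in the same subset across runs. The function name and the use of a file path as the key are assumptions; a Code node would still need to write the resulting tag into the item's metadata.

```python
import hashlib


def deterministic_subset(item_key, distribution=(80, 10, 10)):
    """Map an item key to train/validation/test using a stable hash instead of randomness."""
    digest = hashlib.sha256(item_key.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable value in the range 0..99
    train_pct, validation_pct, _ = distribution
    if bucket < train_pct:
        return "train"
    if bucket < train_pct + validation_pct:
        return "validation"
    return "test"


# The same key always yields the same subset
subset_first = deterministic_subset("/images/cat_001.jpg")
subset_second = deterministic_subset("/images/cat_001.jpg")
```

Unlike a random split, the subset sizes here only approximate the requested percentages, but an item never moves between train and test when the pipeline is rerun.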

Executing a Pipeline

Once you configure your Active Learning Pipeline, click Start Pipeline to activate it. From then on, any new triggering event will execute the pipeline.

To manually trigger the pipeline over existing data, use the SDK or:

Go to the Browser of the Dataset you set in your task nodes.

Filter the required data.

Click Dataset Actions -> Run with pipeline -> Select your pipeline -> Click Execute.