Overview

After training a model, you can evaluate the quality and accuracy of the new model using Dataloop's Models tool.

Model Management provides evaluation metrics, such as precision and recall, to help you determine how well your models perform. The metrics are calculated on the test set you specify.
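Precision and recall follow their standard definitions: precision = TP / (TP + FP) and recall = TP / (TP + FN), where TP, FP, and FN are true positives, false positives, and false negatives. The minimal sketch below computes both from raw counts. It illustrates the formulas only. It does not reproduce how Model Management matches predictions to ground truth (for example, any IoU threshold used for detection), which this article does not describe. Returning 0.0 when a denominator is zero is an assumed convention.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from true-positive, false-positive and false-negative counts."""
    # Precision: fraction of the model's predictions that were correct.
    precision = tp / (tp + fp) if (tp + fp) else 0.0  # assumed convention: 0.0 when undefined
    # Recall: fraction of the ground-truth items that the model found.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Example: 8 correct predictions, 2 wrong predictions, 4 missed items.
p, r = precision_recall(tp=8, fp=2, fn=4)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.80 recall=0.67
```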

Evaluating a model version in Dataloop creates a dedicated application service, which runs an evaluation execution on your data.

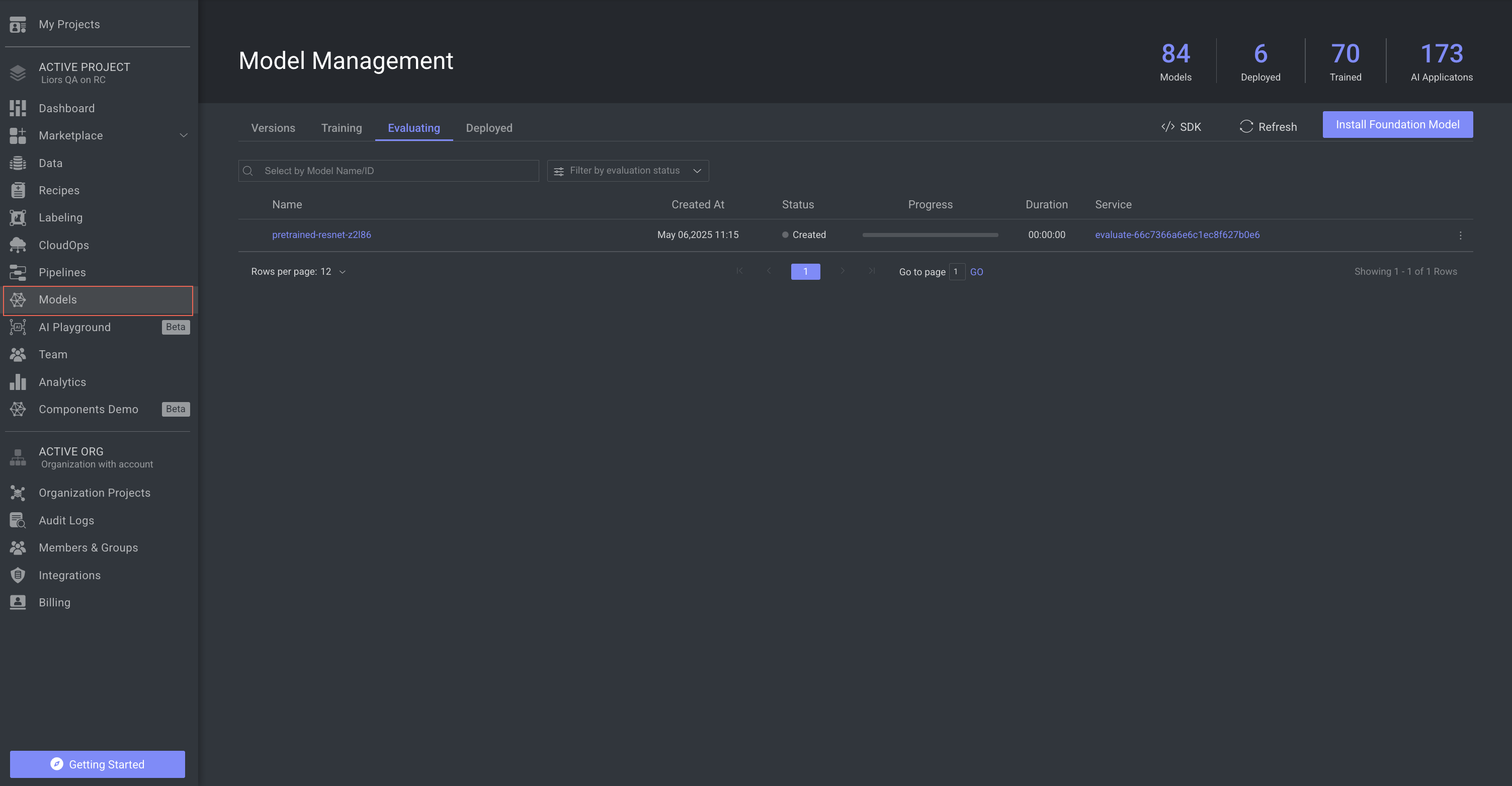

Evaluation Table Details

The evaluation table lists each model under evaluation with the following details:

| Field | Description |
|---|---|
| Name | The name of the model. Click it to open the model's details page. |
| Created At | The timestamp when the model's evaluation process was created. |
| Status | The status of the model evaluation. To learn more, refer to the Models Evaluating Status section. |
| Progress | A progress bar showing how far the evaluation process has advanced. |
| Duration | The time taken by the evaluation process. |
| Service | The name of the evaluation service. Click it to open the service page. |

Search and filter evaluating models

The Dataloop platform lets you search the models under evaluation by Model Name or Model ID, so you can narrow down the displayed list.

Use the Search field to search and filter the models.
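Conceptually, the search works like a filter over the rows of the evaluation table. In this sketch, `EvaluationRow` is a hypothetical record type that mirrors some of the table columns. Matching either a case-insensitive name substring or an exact model ID is an assumption about how the Search field behaves. It does not describe the platform's implementation.

```python
from dataclasses import dataclass


@dataclass
class EvaluationRow:
    """Hypothetical record mirroring some columns of the evaluation table."""
    model_id: str
    name: str
    status: str


def search_evaluations(rows: list[EvaluationRow], query: str) -> list[EvaluationRow]:
    """Return rows whose name contains the query (case-insensitive) or whose ID equals it."""
    q = query.strip().lower()
    return [r for r in rows if q in r.name.lower() or q == r.model_id.lower()]


# Hypothetical IDs and names, for illustration only.
rows = [
    EvaluationRow("64f1a2", "resnet-v1", "Success"),
    EvaluationRow("64f1b3", "yolov5-small", "In Progress"),
]
print([r.name for r in search_evaluations(rows, "YOLO")])  # ['yolov5-small']
```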

Filter models by evaluation status

The Dataloop platform also lets you filter the models under evaluation by status. Use the Filter by Training Status field to select a status. To learn more, refer to the Models Evaluating Status section.
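The status filter keeps only the rows whose status matches the selected value. The sketch below uses plain dictionaries with hypothetical `name` and `status` keys. The status strings come from the Models Evaluating Status section. Selecting several statuses at once is an assumption made for illustration.

```python
def filter_by_status(rows: list[dict], statuses: set[str]) -> list[dict]:
    """Keep only rows whose 'status' value is one of the selected statuses."""
    return [row for row in rows if row["status"] in statuses]


# Hypothetical rows, for illustration only.
rows = [
    {"name": "resnet-v1", "status": "Success"},
    {"name": "yolov5-small", "status": "Failed"},
    {"name": "yolov5-large", "status": "Pending"},
]
failed_or_pending = filter_by_status(rows, {"Failed", "Pending"})
print([row["name"] for row in failed_or_pending])  # ['yolov5-small', 'yolov5-large']
```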

Models Evaluating Status

The evaluation table shows the evaluation status of each model version, which can be one of the following:

- Created: The model evaluation process has been created.

- In Progress: The model evaluation process has started and is running.

- Success: The model evaluation has been successfully completed.

- Failed: The model evaluation failed. This can be caused by a problem with the model adapter, the evaluation method, the configuration, or the compute resources. Refer to the logs for more information.

- Aborted: The model evaluation execution was aborted. The evaluation stopped unexpectedly, possibly due to an unforeseen issue.

- Terminated: The model evaluation was terminated. The user intentionally ended the model's evaluation.

- Rerun: The model evaluation is set to rerun, meaning the evaluation process may need to run again.

- Pending: The model version is waiting to be evaluated. Evaluation begins when resources become available on the evaluation service (whose name begins with 'mgmt-train' and ends with the model ID of the respective model architecture).
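When tracking evaluations, for example while polling their progress, it can help to separate end states from states that may still change. The sketch below encodes the statuses listed above as an enum. The split into final and non-final statuses is an interpretation of the descriptions above, not a documented platform rule.

```python
from enum import Enum


class EvaluationStatus(Enum):
    """Evaluation statuses as listed in this section."""
    CREATED = "Created"
    IN_PROGRESS = "In Progress"
    SUCCESS = "Success"
    FAILED = "Failed"
    ABORTED = "Aborted"
    TERMINATED = "Terminated"
    RERUN = "Rerun"
    PENDING = "Pending"


# Assumption: these statuses mean the evaluation will not progress any further on its own.
FINAL_STATUSES = {
    EvaluationStatus.SUCCESS,
    EvaluationStatus.FAILED,
    EvaluationStatus.ABORTED,
    EvaluationStatus.TERMINATED,
}


def is_final(status: EvaluationStatus) -> bool:
    """Return True if the evaluation has reached an end state."""
    return status in FINAL_STATUSES


print(is_final(EvaluationStatus("Pending")))  # False
print(is_final(EvaluationStatus("Success")))  # True
```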

Evaluation Service & Resources

Every public model architecture has a matching training service with the respective configuration. This service is used to train all model versions derived from that architecture. For example, all ResNet-based versions are trained using the same service.

The service is installed the first time it is needed. Because it is not pre-installed in a project, you won't be able to see or configure it until you start your first training process.

The training service is named "mgmt-train" + model ID, where the model ID is the ID of the model architecture (e.g., ResNet, YoloV5).
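The naming convention makes it possible to pick an architecture's training service out of a project's service list. The article does not say whether a separator appears between the prefix and the ID, so this sketch checks only the two parts it guarantees: the "mgmt-train" prefix and the model ID suffix. The service names and IDs in the example are hypothetical.

```python
from typing import Optional


def matches_training_service(service_name: str, architecture_id: str) -> bool:
    """Return True if the name starts with 'mgmt-train' and ends with the architecture's model ID."""
    return service_name.startswith("mgmt-train") and service_name.endswith(architecture_id)


def find_training_service(service_names: list[str], architecture_id: str) -> Optional[str]:
    """Return the first service name that follows the convention, or None if none exists yet."""
    for name in service_names:
        if matches_training_service(name, architecture_id):
            return name
    # None is expected if no training has been started in the project yet.
    return None


# Hypothetical service names, for illustration only.
services = ["mgmt-train-64f1a2", "my-custom-service"]
print(find_training_service(services, "64f1a2"))  # mgmt-train-64f1a2
print(find_training_service(services, "99ffee"))  # None
```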

Any private model added to the Dataloop platform must implement a train function that performs the training process. When you train the model, a Dataloop service is started with the model and runs its train function.
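The requirement above is essentially an interface contract: the platform's service calls a train function that the model provides. The sketch below expresses that contract in plain Python. `TrainableModel`, `MeanPredictor`, and `run_training_service` are hypothetical names. This is not the Dataloop SDK's model adapter interface, whose exact signature is not shown here, and the toy model only illustrates the calling pattern.

```python
from abc import ABC, abstractmethod


class TrainableModel(ABC):
    """Hypothetical contract: a private model must expose a train function."""

    @abstractmethod
    def train(self, data: list[tuple[float, float]]) -> None:
        """Run the training process on (input, target) pairs."""


class MeanPredictor(TrainableModel):
    """Toy model that 'trains' by storing the mean of the targets."""

    def __init__(self) -> None:
        self.mean = 0.0

    def train(self, data: list[tuple[float, float]]) -> None:
        if not data:
            raise ValueError("training data is empty")
        targets = [y for _, y in data]
        self.mean = sum(targets) / len(targets)


def run_training_service(model: TrainableModel, data: list[tuple[float, float]]) -> TrainableModel:
    """Hypothetical stand-in for the Dataloop service: it calls the model's train function."""
    model.train(data)
    return model


model = run_training_service(MeanPredictor(), [(0.0, 1.0), (1.0, 3.0)])
print(model.mean)  # 2.0
```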

Like any Dataloop service, it has its own compute settings. Two settings are worth mentioning here:

- Instance type: By default, all training services use GPU instances.

- Auto-scaling: Set to 1 by default. Increase the auto-scaler parameter if you intend to train multiple model versions (snapshots) simultaneously.
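To see why the auto-scaling value matters, consider how many queued executions can run at once. This sketch assumes that each replica handles one execution at a time and that the auto-scaling value caps the number of replicas. A real service may allow several executions per replica. `ComputeSettings` is a hypothetical type, and "gpu" is a placeholder, not an actual Dataloop instance type name.

```python
from dataclasses import dataclass


@dataclass
class ComputeSettings:
    """Hypothetical subset of a service's compute settings."""
    instance_type: str = "gpu"  # placeholder; training services default to GPU instances
    max_replicas: int = 1       # the auto-scaling default described above


def split_queue(settings: ComputeSettings, queued: int) -> tuple[int, int]:
    """Return (running, waiting), assuming each replica handles one execution at a time."""
    running = min(queued, settings.max_replicas)
    return running, queued - running


print(split_queue(ComputeSettings(), queued=3))                # (1, 2)
print(split_queue(ComputeSettings(max_replicas=3), queued=3))  # (3, 0)
```

With the default value of 1, two of the three executions wait in the queue, which matches the Pending status described earlier.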

Evaluating Metrics

While training is in progress, metrics are recorded and can be viewed from the Explore tab. To learn more, refer to the Training Metrics article.

Copy Evaluating Model ID

- Go to the Models page from the left-side menu.

- Select the Evaluation tab.

- Find the model whose ID you want to copy.

- Click the three-dot menu and select Copy Model ID.

Copy Execution ID

To copy the execution ID of a model under evaluation:

- Go to the Models page from the left-side menu.

- Select the Evaluation tab.

- Find the model whose execution ID you want to copy.

- Click the three-dot menu and select Copy Execution ID.

Abort the Model Evaluation Process

- Go to the Models page from the left-side menu.

- Select the Evaluation tab.

- Find the model whose evaluation you want to abort.

- Click the three-dot menu and select Abort Evaluation.

Error and warning indications

When a service encounters errors such as Crashloop, ImagePullBackOff, or OOM (out of memory), or when the service is paused, pending executions get stuck in the queue (in Created status). Click or hover over the error or warning icon to view the details.

- An error icon is displayed when a service fails to start running. Click the provided service link to open the service's Executions tab. The pending evaluation execution status will be Created, In Progress, or Rerun.

- A warning icon is displayed when a service is inactive. Click the provided service link to open the service page. The pending evaluation execution status will be Created or Rerun.
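The rules above map a service's condition to an icon and to the statuses a stuck evaluation execution can have. The sketch below encodes that mapping. The condition strings and the `service_active` flag are hypothetical inputs chosen for illustration. The error set lists only the examples named above, so it is not exhaustive.

```python
from typing import Optional

# Error conditions named in this section (illustrative, not exhaustive).
START_FAILURES = {"Crashloop", "ImagePullBackOff", "OOM"}

# Statuses a stuck evaluation execution can show for each icon, per the list above.
STUCK_STATUSES = {
    "error": {"Created", "In Progress", "Rerun"},
    "warning": {"Created", "Rerun"},
}


def indicator(service_condition: Optional[str], service_active: bool) -> Optional[str]:
    """Return 'error', 'warning', or None for the icon shown next to a pending evaluation."""
    if service_condition in START_FAILURES:
        return "error"    # service failed to start; the link opens its Executions tab
    if not service_active:
        return "warning"  # service is inactive (e.g., paused); the link opens the service page
    return None


print(indicator("OOM", service_active=True))  # error
print(indicator(None, service_active=False))  # warning
print(indicator(None, service_active=True))   # None
```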