Overview

Training a model version in the Dataloop system creates a dedicated training pipeline that injects data into a training service.

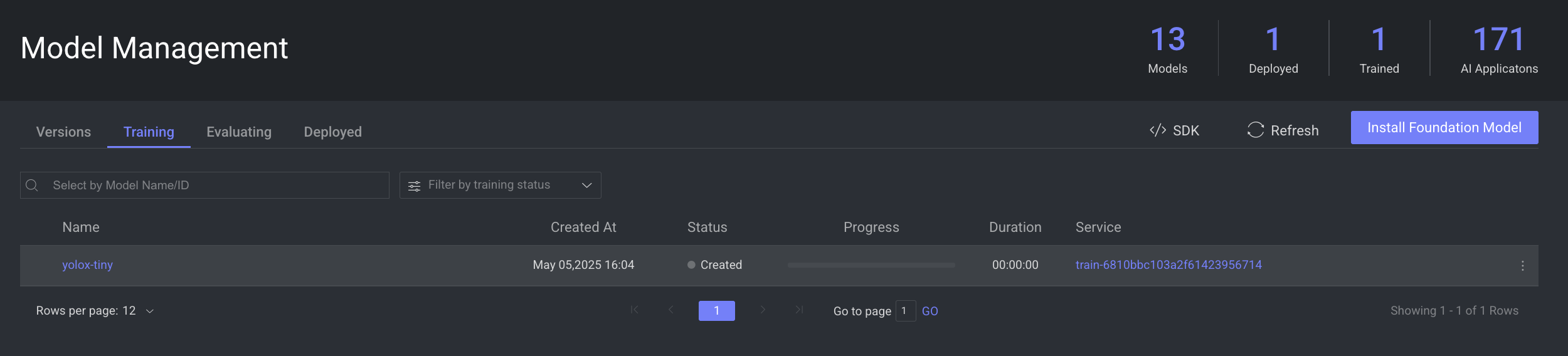

Training Table Details

The Training table lists the models in training and the following details for each:

| Field | Description |
|---|---|
| Name | The name of the model. Click it to open the model's details page. |
| Created At | The time the training process was created. |
| Status | The training status of the model. To learn more, refer to the Model Training Status section. |
| Progress | A progress bar showing how far the training process has advanced. |
| Duration | The time the training process has taken. |
| Service | The name of the training service. Click it to open the service page. |

Search and Filter Training Models

The Dataloop platform lets you search the models in training by model name or model ID, so you can narrow down the displayed list.

Use the Search field to search and filter the models.

Filter Models by Training Status

You can also filter the models in training by status. Use the Filter by Training Status field to select the statuses to display. To learn more, refer to the Model Training Status section.
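The search and status filter can be modeled as a simple predicate over the table rows. The sketch below is illustrative only: `TrainingRow` and `filter_training_rows` are hypothetical names, not part of any Dataloop API, and it assumes a case-insensitive substring match on the model name or ID, which may differ from how the platform's Search field actually matches.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set

# Statuses listed in the Model Training Status section.
KNOWN_STATUSES = {"Created", "In Progress", "Success", "Failed",
                  "Aborted", "Terminated", "Rerun", "Pending"}


@dataclass
class TrainingRow:
    """Hypothetical record for one row of the Training table."""
    model_id: str
    name: str
    status: str


def filter_training_rows(rows: Iterable[TrainingRow],
                         query: str = "",
                         statuses: Optional[Set[str]] = None) -> List[TrainingRow]:
    """Keep rows whose name or ID contains `query` (case-insensitive) and,
    if `statuses` is given, whose status is one of them."""
    if statuses is not None and not statuses <= KNOWN_STATUSES:
        raise ValueError(f"Unknown statuses: {sorted(statuses - KNOWN_STATUSES)}")
    q = query.strip().lower()
    result = []
    for row in rows:
        if q and q not in row.name.lower() and q not in row.model_id.lower():
            continue  # search term matches neither the name nor the ID
        if statuses is not None and row.status not in statuses:
            continue  # the status filter excludes this row
        result.append(row)
    return result


rows = [
    TrainingRow("64a1", "resnet-v1", "Success"),
    TrainingRow("64b2", "resnet-v2", "Pending"),
    TrainingRow("64c3", "yolo-v1", "Failed"),
]
print([r.name for r in filter_training_rows(rows, "resnet", {"Pending"})])  # ['resnet-v2']
```

Combining both criteria in one function mirrors how the Search field and the status filter narrow the same list together.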

Model Training Status

The Status column of the Training table shows the training status of each model version, which can be one of the following:

- Created: The model training process has been created.

- In Progress: The model training process has started and is running.

- Success: The model training completed successfully.

- Failed: The model training failed. This can be caused by a problem with the model adapter, the training method, the configuration, or compute resources. Refer to the logs for more information.

- Aborted: The training execution was aborted; the training stopped unexpectedly, possibly due to an unforeseen issue.

- Terminated: A user intentionally ended the model training.

- Rerun: The training execution is set to rerun, so the training process may need to run again.

- Pending: The model version is waiting to be trained. Training begins when resources become available on the training service, whose name begins with 'mgmt-train' and ends with the model ID of the respective model architecture. A single service handles training for all versions of an architecture (for example, all ResNet model versions). To start training immediately, increase the service's auto-scaling factor.

Training Service & Resources

For every public model architecture, there is a matching training service with the respective configuration. This service is used to train all model versions derived from that architecture. For example, all ResNet-based versions are trained using the same service.

The service is installed the first time it is needed; it is not pre-installed in a project, so you cannot see or configure it until you start your first training process.

The training service name follows the convention "mgmt-train" + model ID, where the model ID is the ID of the model architecture (e.g., ResNet, YoloV5).
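As an illustration of this convention, the hypothetical helper below checks whether a service name belongs to a given model architecture. It relies only on the documented prefix and suffix and deliberately does not assume any separator between them; the IDs in the tests are made up.

```python
TRAIN_SERVICE_PREFIX = "mgmt-train"


def is_training_service_for(service_name: str, model_id: str) -> bool:
    """True if the name starts with 'mgmt-train' and ends with `model_id`.

    No separator between the prefix and the ID is assumed.
    """
    if not model_id:
        return False
    return (
        # Prefix and ID must not overlap inside the name.
        len(service_name) >= len(TRAIN_SERVICE_PREFIX) + len(model_id)
        and service_name.startswith(TRAIN_SERVICE_PREFIX)
        and service_name.endswith(model_id)
    )
```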

Any private model added to the Dataloop platform must implement a Train function that performs the training process. When the model is trained, a Dataloop service is started with the model and runs its Train function.
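The requirement can be pictured as an interface contract: the training service holds a model object and calls its train function. The sketch below uses hypothetical names (`TrainableModel`, `DummyModel`, `run_training_service`) and an assumed `train(data_path, output_path)` signature; it is not the Dataloop SDK's model adapter, whose actual class and method signatures should be taken from the SDK reference.

```python
from abc import ABC, abstractmethod
from typing import List, Tuple


class TrainableModel(ABC):
    """Hypothetical contract: any model the service trains exposes train()."""

    @abstractmethod
    def train(self, data_path: str, output_path: str) -> None:
        """Train on data found at data_path and write artifacts to output_path."""


class DummyModel(TrainableModel):
    def __init__(self) -> None:
        self.calls: List[Tuple[str, str]] = []

    def train(self, data_path: str, output_path: str) -> None:
        # A real implementation would load data, run epochs, and save weights.
        self.calls.append((data_path, output_path))


def run_training_service(model: TrainableModel, data_path: str, output_path: str) -> None:
    """Stand-in for the training service: it simply invokes the model's train function."""
    model.train(data_path, output_path)
```

Declaring `train` as abstract means a model class that forgets to implement it cannot even be instantiated, which is the point of a contract like this.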

Like any Dataloop service, the training service has its own compute settings. Two settings worth mentioning are:

- Instance type: By default, all training services use GPU instances.

- Auto-scaling: Set to 1 by default. Increase the auto-scaling parameter if you intend to train multiple model versions (snapshots) simultaneously.
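To see why the auto-scaling setting matters, here is a minimal sketch of how queued trainings split into running and pending, assuming each replica of the training service runs one training at a time. `schedule_trainings` is a hypothetical helper, not platform behavior confirmed by this page.

```python
from typing import List, Tuple


def schedule_trainings(requests: List[str], max_replicas: int) -> Tuple[List[str], List[str]]:
    """Split queued training requests (in arrival order) into running and pending,
    assuming one training per replica."""
    if max_replicas < 1:
        raise ValueError("max_replicas must be at least 1")
    return requests[:max_replicas], requests[max_replicas:]
```

With the default of one replica, three queued model versions leave two in Pending; raising the limit to three lets all of them run at once.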

Training Metrics

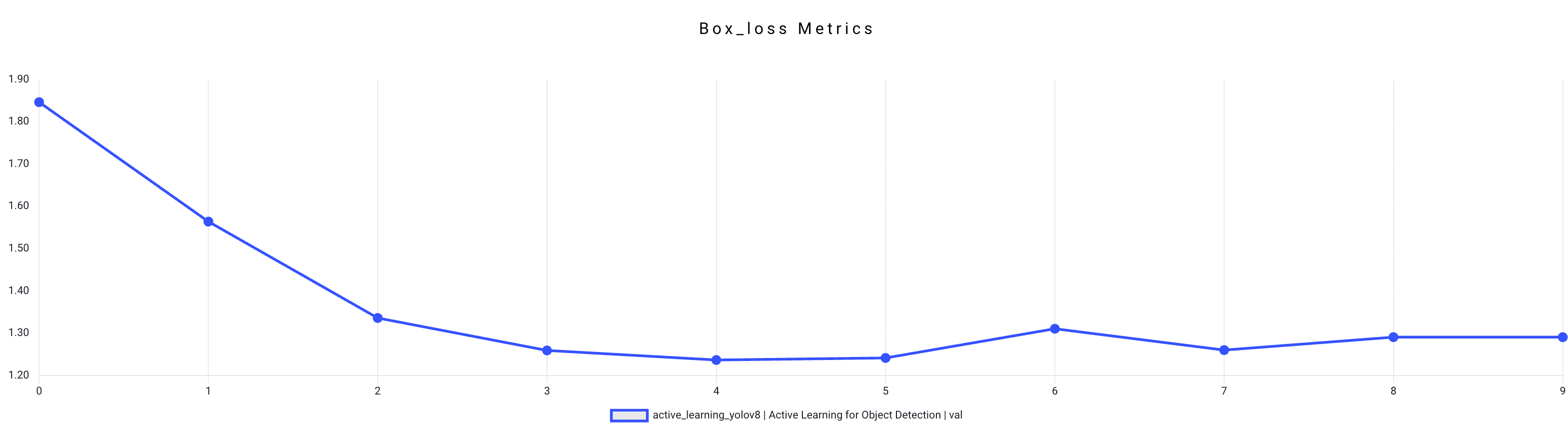

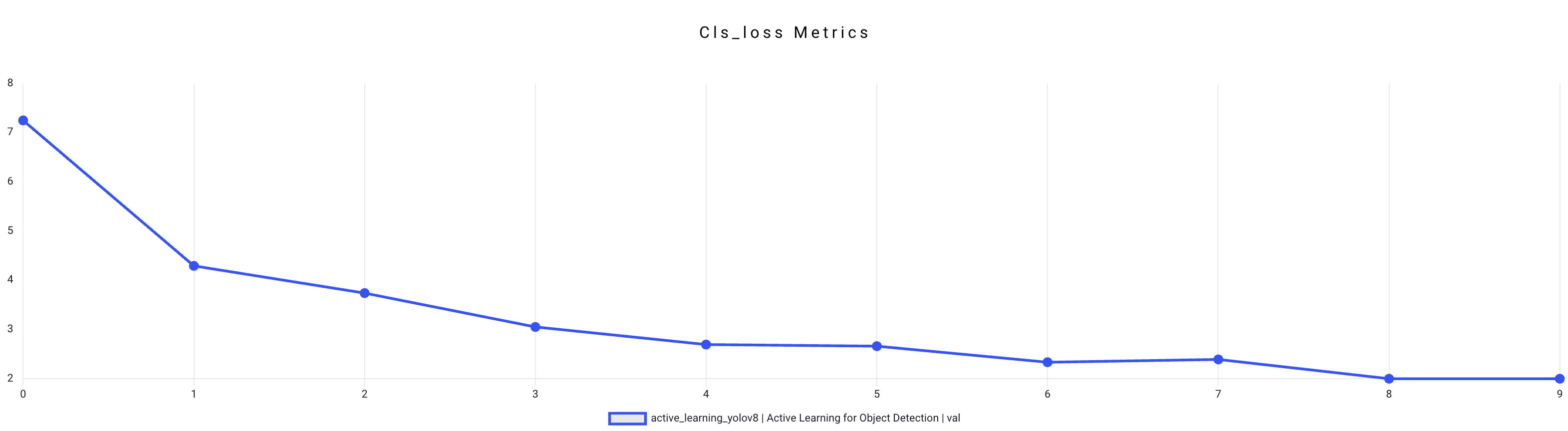

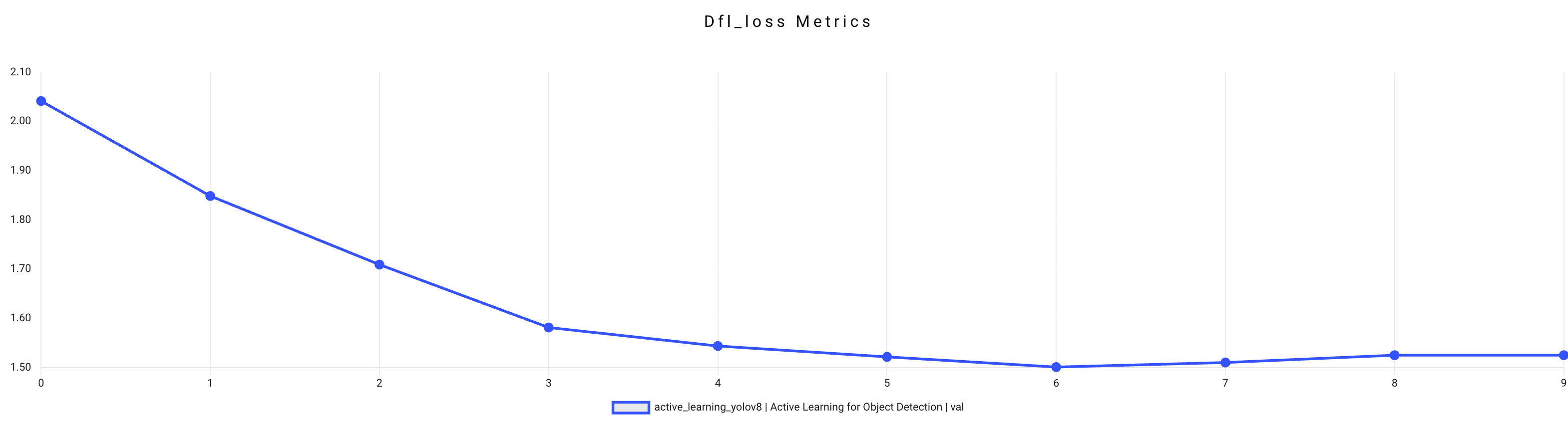

While training is in progress, the following metrics are recorded and can be viewed in the Explore tab. Each metric is plotted as a graph:

- X-axis: The training iteration or epoch. Each point on the x-axis corresponds to a point in training at which the model has updated its parameters on training data, so the axis provides a timeline of training progress.

- Y-axis: The value of the metric at each iteration or epoch. For example, in the box loss graph, the y-axis shows the box loss, which quantifies the difference between the predicted and the ground-truth bounding boxes. Lower values mean the predicted boxes are closer to the ground truth.

Object Detection Loss

Loss functions play a critical role in training an object detection model. They quantify the discrepancy between the model's predictions and the ground-truth annotations, providing a measure of how well the model is learning. Common loss components in object detection include:

Box Loss Metrics

Box loss measures the error in predicting the coordinates of bounding boxes. It encourages the model to adjust the predicted bounding boxes to align with the ground truth boxes.
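The exact box loss depends on the model architecture. As a minimal sketch, assuming axis-aligned boxes in `(x1, y1, x2, y2)` form, the plain IoU loss below is 0 when the predicted box matches the ground truth exactly and 1 when they do not overlap. Many detectors use IoU variants (such as GIoU or CIoU) that add extra penalty terms.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # 0 if the boxes do not overlap
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def iou_box_loss(pred: Box, target: Box) -> float:
    """Plain IoU loss: 0 for a perfect match, 1 for no overlap."""
    return 1.0 - iou(pred, target)
```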

Cls Loss Metrics

Class loss quantifies the error in predicting the object class for each bounding box. It ensures that the model accurately identifies the object's category.
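Class loss is commonly implemented as a cross-entropy. The sketch below assumes per-class probabilities and 0/1 targets and computes the mean binary cross-entropy, one common choice for detection heads; the model you train may use a different formulation.

```python
import math
from typing import Sequence


def bce_class_loss(probs: Sequence[float], targets: Sequence[float], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between predicted class probabilities and 0/1 targets."""
    if len(probs) != len(targets):
        raise ValueError("probs and targets must have the same length")
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(probs)
```

A confident, correct prediction such as `[0.99, 0.01]` for targets `[1, 0]` yields a much lower loss than a hesitant `[0.6, 0.4]`.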

Dfl Loss Metrics

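Distribution Focal Loss (DFL) refines bounding box localization. Instead of predicting each box edge as a single value, the model predicts a probability distribution over discrete positions, and DFL trains that distribution to concentrate around the true edge position. This helps when object boundaries are ambiguous, for example with blurry or occluded objects.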
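A minimal sketch of DFL for a single box edge, following the common DFL formulation: the target edge position is a continuous value lying between two integer bins, and the loss is a cross-entropy on those two bins, weighted by how close the target is to each. The function names are illustrative; real implementations apply this per edge and per box on batched tensors.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]  # subtract the max for numerical stability
    s = sum(exps)
    return [e / s for e in exps]


def distribution_focal_loss(logits: Sequence[float], target: float) -> float:
    """DFL for one box edge.

    `logits` score the discrete bins 0..n-1; `target` is the continuous edge
    position in bin units.
    """
    n = len(logits)
    if n < 2:
        raise ValueError("need at least two bins")
    target = min(max(target, 0.0), n - 1 - 1e-6)  # keep the right-hand bin in range
    left = int(math.floor(target))
    right = left + 1
    w_left = right - target   # closer to the left bin -> larger weight on it
    w_right = target - left
    probs = softmax(logits)
    return -(w_left * math.log(probs[left]) + w_right * math.log(probs[right]))


def expected_offset(logits: Sequence[float]) -> float:
    """Decode the distribution back to a continuous edge position (its mean)."""
    return sum(i * p for i, p in enumerate(softmax(logits)))
```

A uniform distribution over four bins gives a loss of ln 4 for a target of 1.5, while a distribution peaked on bins 1 and 2 lowers it toward ln 2.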

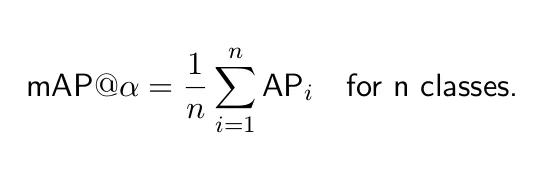

Mean Average Precision (mAP)

Mean Average Precision (mAP) is a crucial metric in object detection that evaluates model performance by considering both precision and recall across multiple object classes.

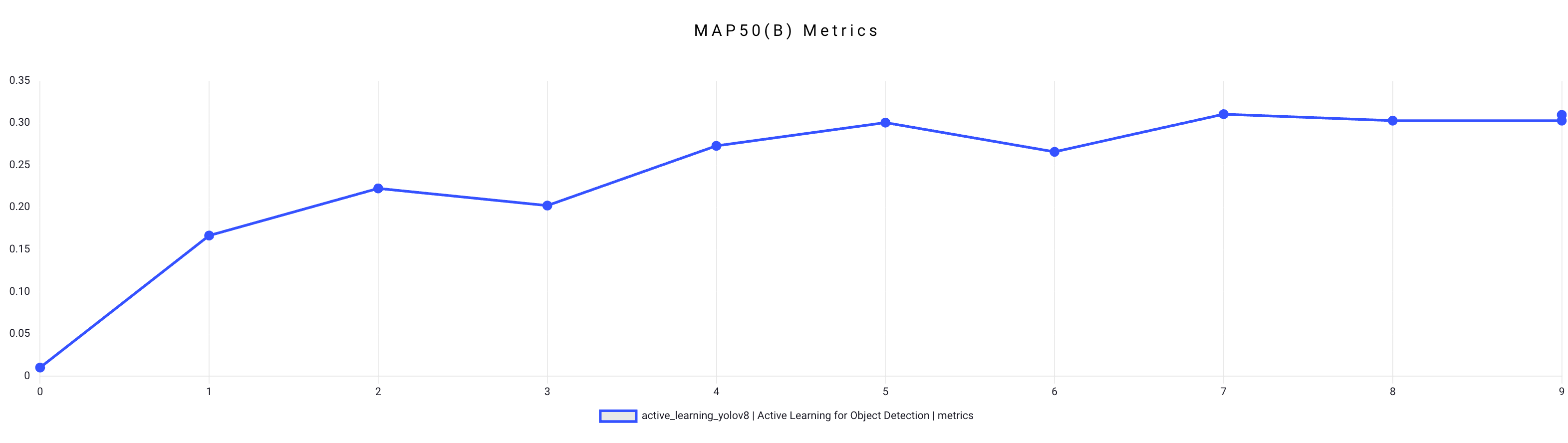

Map50 (b) Metrics

mAP50 computes mAP at a single Intersection over Union (IoU) threshold of 0.5: a predicted box counts as a correct detection only if its IoU with a ground-truth box is at least 0.5. It therefore measures how well a model identifies objects with reasonable overlap. Higher mAP50 scores indicate better detection performance at this threshold.
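To make the computation concrete, the sketch below computes AP for one class from detections that have already been marked as true or false positives at IoU 0.5 and sorted by descending confidence, then averages AP over classes to get mAP50. It uses all-point interpolation; benchmarks such as COCO use a 101-point interpolation instead, so values can differ slightly. All names are illustrative.

```python
from typing import Dict, List, Sequence, Tuple


def average_precision(tp_flags: Sequence[bool], num_ground_truths: int) -> float:
    """AP for one class with all-point interpolation.

    `tp_flags` has one entry per detection, sorted by descending confidence:
    True if the detection matched a ground truth at IoU >= 0.5, else False.
    """
    if num_ground_truths == 0:
        return 0.0
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += int(is_tp)
        precisions.append(tp / k)                 # precision after k detections
        recalls.append(tp / num_ground_truths)    # recall after k detections
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        # Interpolated precision: the best precision at this recall level or beyond.
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap


def mean_average_precision(per_class: Dict[str, Tuple[Sequence[bool], int]]) -> float:
    """mAP: the mean of per-class AP values."""
    aps = [average_precision(flags, n) for flags, n in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0
```

For example, a class with two ground truths whose ranked detections are TP, FP, TP gets an AP of 0.5 × 1 + 0.5 × 2/3 ≈ 0.83.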

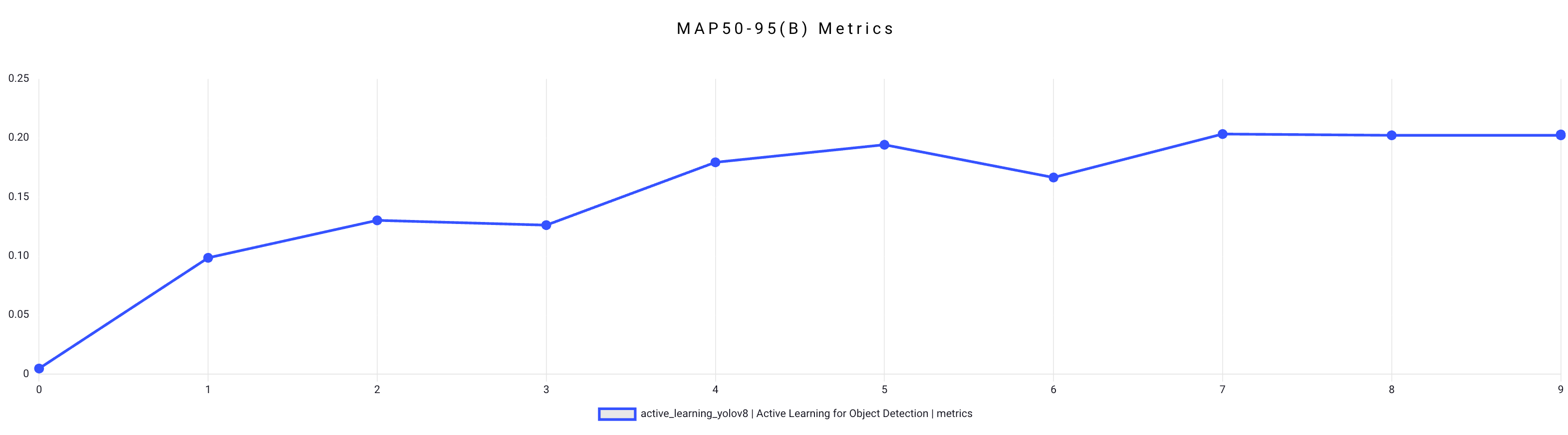

Map50-95 (b) Metrics

To provide a more comprehensive assessment, mAP50-95 averages mAP over a range of IoU thresholds from 0.5 to 0.95 (in steps of 0.05 under the common COCO convention). Because the stricter thresholds reward tighter boxes, this metric is especially valuable for tasks requiring precise localization and fine-grained object detection.
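Given any function that returns mAP at a specified IoU threshold, mAP50-95 is the mean over the ten thresholds 0.50, 0.55, ..., 0.95. In the sketch below, `map_at` is a hypothetical callable standing in for that computation.

```python
from typing import Callable, List


def iou_thresholds(start: float = 0.5, stop: float = 0.95, step: float = 0.05) -> List[float]:
    """Evenly spaced IoU thresholds, rounded to avoid floating-point drift."""
    n = int(round((stop - start) / step)) + 1
    return [round(start + i * step, 2) for i in range(n)]


def map50_95(map_at: Callable[[float], float]) -> float:
    """Average mAP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = iou_thresholds()
    return sum(map_at(t) for t in thresholds) / len(thresholds)
```

A model that scores perfectly up to IoU 0.7 but fails beyond it gets an mAP50-95 of 0.5 even with a perfect mAP50, which is why the averaged metric better reflects localization quality.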

In practice, mAP50 and mAP50-95 help assess model performance across classes and conditions while accounting for the precision-recall trade-off. Models with higher mAP50 and mAP50-95 scores are generally more reliable and better suited to demanding applications such as autonomous driving and security surveillance.

Precision and Recall

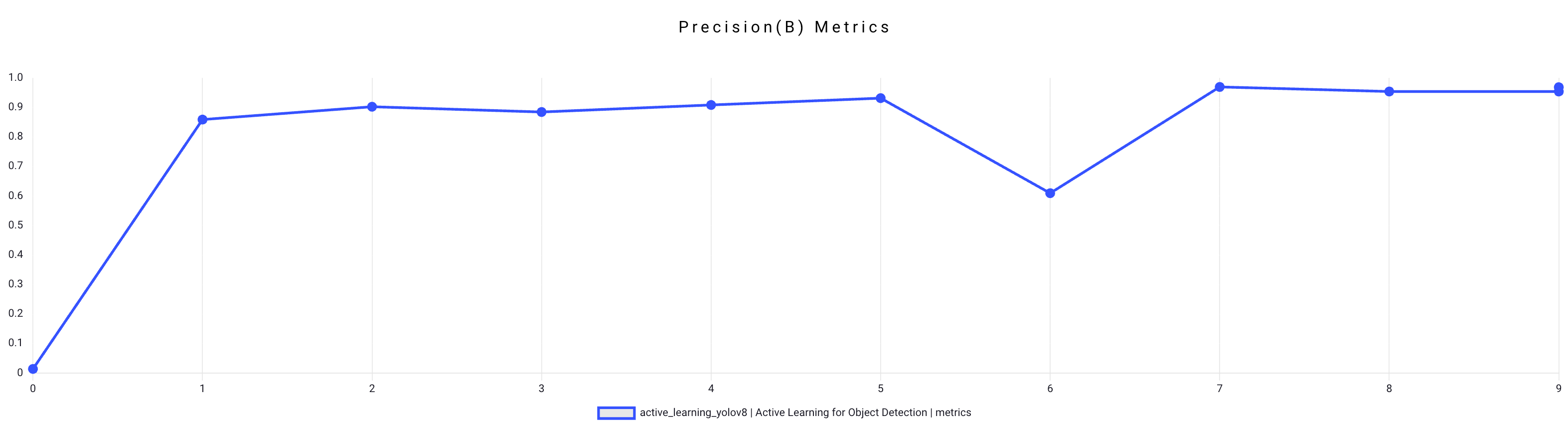

A model is said to be good if it has high precision and high recall. A perfect model has zero FNs and zero FPs (precision=1 and recall=1). Often, attaining a perfect model is not feasible.

Definition of terms:

- True Positive (TP): A correct detection, that is, a predicted box that matches a ground-truth object (typically with an IoU at or above a threshold).

- False Positive (FP): An incorrect detection, that is, a predicted box that does not match any ground-truth object or duplicates an already matched one.

- False Negative (FN): A ground-truth object missed (not detected) by the detector.

- True Negative (TN): A background region correctly not detected by the model. This quantity is not used in object detection because background regions are not explicitly annotated when preparing the annotations.
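These counts depend on how detections are matched to ground truths. A minimal sketch, assuming greedy matching in order of descending confidence: each detection is paired with the unmatched ground-truth box it overlaps most, and counts as a TP if that IoU is at least the threshold (0.5 here). Class labels are ignored for brevity, so all inputs are assumed to belong to one class.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_tp_fp_fn(detections: List[Tuple[float, Box]],
                   ground_truths: List[Box],
                   iou_thr: float = 0.5) -> Tuple[int, int, int]:
    """Count TP, FP, and FN for (confidence, box) detections against ground-truth boxes."""
    matched = [False] * len(ground_truths)
    tp = fp = 0
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths):
            if not matched[j]:  # each ground truth can be matched only once
                v = iou(box, gt)
                if v > best_iou:
                    best_iou, best_j = v, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1  # no overlap, too little overlap, or a duplicate detection
    fn = matched.count(False)  # ground truths no detection claimed
    return tp, fp, fn
```

In this scheme, a second box on an already detected object counts as a false positive, which is how duplicate detections lower precision.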

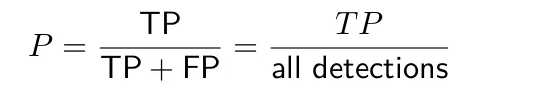

Precision (b) Metrics

Precision is the degree of exactness of the model in identifying only relevant objects. It is the ratio of TPs to all detections made by the model: Precision = TP / (TP + FP).
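As a worked example, if a model makes 10 detections and 8 of them are correct, precision is 8 / (8 + 2) = 0.8. The helper below returns 0.0 when there are no detections; that convention is an assumption, since precision is undefined in that case.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of the model's detections that are correct: TP / (TP + FP)."""
    detections = tp + fp
    return tp / detections if detections else 0.0  # assumed convention for no detections
```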

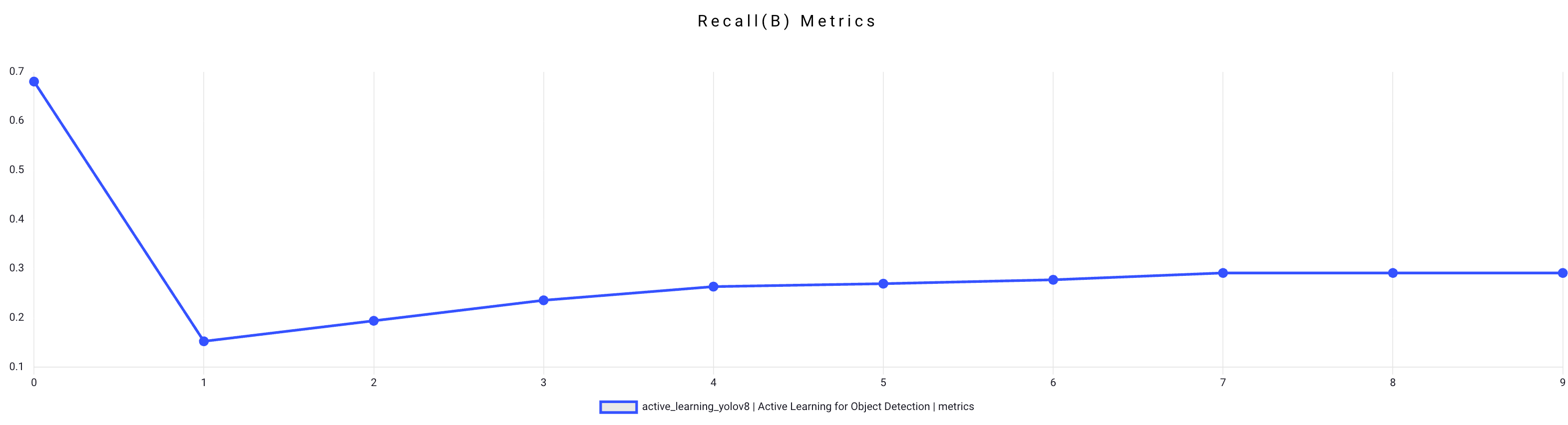

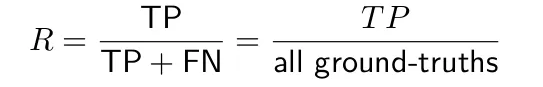

Recall (b) Metrics

Recall measures the model's ability to detect all ground-truth objects. It is the proportion of TPs among all ground truths: Recall = TP / (TP + FN).
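Likewise, if a dataset contains 12 ground-truth objects and the model finds 8 of them, recall is 8 / (8 + 4) ≈ 0.67. The helper below returns 0.0 when there are no ground truths, again an assumed convention.

```python
def recall(tp: int, fn: int) -> float:
    """Fraction of ground-truth objects the model detected: TP / (TP + FN)."""
    ground_truths = tp + fn
    return tp / ground_truths if ground_truths else 0.0  # assumed convention for no ground truths
```

A perfect model has FN = 0, so its recall is 1.0; together with FP = 0 (precision 1.0), this matches the perfect-model case described above.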

Copy a Training Model ID

- Go to the Models page from the left-side menu.

- Select the Training tab.

- Find the model whose ID you want to copy.

- Click the three-dot menu and select Copy Model ID.

Copy Execution ID

To copy the execution ID of a model in training:

- Go to the Models page from the left-side menu.

- Select the Training tab.

- Find the model whose execution ID you want to copy.

- Click the three-dot menu and select Copy Execution ID.

Abort the Model Training Process

- Go to the Models page from the left-side menu.

- Select the Training tab.

- Find the model whose training you want to abort.

- Click the three-dot menu and select Abort Training.

Remove a Model from the Training Process

- Go to the Models page from the left-side menu.

- Select the Training tab.

- Find the model you want to remove from the training process.

- Click the three-dot menu and select Remove Training.

Error and Warning Indications

When a service encounters an error, such as CrashLoopBackOff, ImagePullBackOff, or OOM (out of memory), or when the service is paused, pending executions get stuck in the queue (for example, in Created status). Click or hover over the error or warning icon to view the details.

- An error icon is displayed when a service fails to start running. Click the service link to open the service's Executions tab. The pending training execution's status is Created, In Progress, or Rerun.

- A warning icon is displayed when a service is inactive. Click the service link to open the service's Executions tab. The pending training execution's status is Created or Rerun.
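The two cases above amount to a small decision rule. The sketch below encodes it with hypothetical boolean inputs and a hypothetical `training_indicator` function; it assumes that a failure to start takes precedence over inactivity when both apply, which this page does not specify.

```python
from typing import Optional, Tuple

# Statuses a stuck training execution may show in each case described above.
ERROR_STATUSES: Tuple[str, ...] = ("Created", "In Progress", "Rerun")
WARNING_STATUSES: Tuple[str, ...] = ("Created", "Rerun")


def training_indicator(failed_to_start: bool,
                       service_active: bool) -> Optional[Tuple[str, Tuple[str, ...]]]:
    """Return (icon, possible pending statuses), or None if no icon is shown."""
    if failed_to_start:
        return "error", ERROR_STATUSES  # assumed to take precedence over inactivity
    if not service_active:
        return "warning", WARNING_STATUSES
    return None
```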