What is a Recipe?

In Dataloop, a Recipe is the core configuration that defines how annotation, evaluation, or review tasks are performed. It establishes the workflow, tools, labels, and interface required to process a specific data type or AI task.

Recipes serve as a blueprint for:

How annotators or reviewers interact with the data

Which tools and labels are available

How outputs are structured and validated

How consistency and quality are maintained across tasks

By standardizing labeling workflows, Recipes help improve annotation quality and accelerate AI and machine learning development. The type of Recipe you create depends on the task context (annotation or evaluation) and the data modality, such as image, video, text, audio, or multimodal data.

Ontology

The ontology contains the labels (classes) and attributes used in the project. The dataset ontology is the building block of a model: it defines the information applied to the data, which represents the knowledge that models are then trained to infer.

Labels (like classes) are the names used for classifying annotations.

Attributes allow additional independent degrees of freedom to define labels.
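As a rough illustration of how labels and attributes relate, the sketch below models an ontology with plain Python dataclasses. The class and field names (`Ontology`, `Label`, `Attribute`, `values`) are hypothetical and are not the Dataloop schema; they only show that labels name the classes, while attributes add independent properties on top of them.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Attribute:
    # Hypothetical: an independent property an annotator can set, e.g. "occluded".
    key: str
    values: List[str]


@dataclass
class Label:
    # Hypothetical: a class name used to classify annotations.
    tag: str
    color: Tuple[int, int, int] = (0, 0, 0)


@dataclass
class Ontology:
    # Hypothetical container holding the labels and attributes of a project.
    labels: List[Label] = field(default_factory=list)
    attributes: List[Attribute] = field(default_factory=list)

    def label_tags(self) -> List[str]:
        """Return the label names in the order they were defined."""
        return [label.tag for label in self.labels]


ontology = Ontology(
    labels=[Label("dog", (34, 6, 231)), Label("cat")],
    attributes=[Attribute("occluded", ["yes", "no"])],
)
```

Keeping attributes separate from labels means a single attribute such as `occluded` can qualify any label without multiplying the number of classes.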

Recipe and Dataset Association

Every Dataset is linked to a single Recipe by default.

During dataset creation, users can:

Create a new Recipe (with the same name as the dataset), or

Link an existing Recipe to the new dataset.

This linkage ensures that the dataset has predefined labeling instructions from the outset.

Recipe and Task Configuration

Annotation and QA tasks derive their configuration from the linked Recipe.

By default, a task uses the recipe associated with the dataset from which its items originate.

However, users can override the default recipe during task creation or editing.

This enables the same data item to be annotated or reviewed under multiple recipes, each with distinct taxonomies or label structures.

This is useful for multi-purpose evaluations, A/B workflows, or cross-domain annotation strategies.
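The default-versus-override rule described above can be sketched in a few lines of plain Python. This is only a conceptual model: the function `resolve_recipe_id` and the recipe IDs are hypothetical and are not part of the Dataloop SDK.

```python
from typing import Optional


def resolve_recipe_id(dataset_recipe_id: str, task_recipe_id: Optional[str] = None) -> str:
    """Return the recipe a task should use.

    Hypothetical helper: the task-level override wins when one is given,
    otherwise the task falls back to the recipe linked to the dataset.
    """
    return task_recipe_id if task_recipe_id is not None else dataset_recipe_id


# Two tasks over the same dataset: one keeps the default, one overrides it.
default_task = resolve_recipe_id("recipe-detection")
review_task = resolve_recipe_id("recipe-detection", task_recipe_id="recipe-quality-review")
```

Under this model, the same items can be annotated once with the dataset's recipe and reviewed again under a different taxonomy without changing the dataset itself.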

Working with Recipes via SDK

Dataloop’s SDK allows developers to programmatically create, manage, and associate recipes within their pipelines.

You can define ontologies, attributes, tools, and task instructions via code.

This is useful for automating dataset onboarding or syncing recipe configurations across projects.

To learn more, visit the Developers Guide on Recipes.
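As a minimal sketch of reading a dataset's recipe from code, the helper below returns the first recipe linked to a dataset together with its label names. It assumes the object passed in behaves like a `dtlpy` Dataset, that is, `dataset.recipes.list()` and `recipe.ontologies.list()` return lists and each ontology exposes `labels` with a `tag` field. The helper name `describe_dataset_recipe` is hypothetical; check the calls against the SDK version you have installed.

```python
def describe_dataset_recipe(dataset):
    """Return the first recipe linked to `dataset` and its label tags.

    Assumption: `dataset` behaves like a dtlpy Dataset, so that
    `dataset.recipes.list()` and `recipe.ontologies.list()` return lists
    and every label in `ontology.labels` has a `tag` attribute.
    """
    recipe = dataset.recipes.list()[0]
    ontology = recipe.ontologies.list()[0]
    return recipe, [label.tag for label in ontology.labels]


# Usage sketch (needs dtlpy and a logged-in session; the ID is a placeholder):
#   import dtlpy as dl
#   dataset = dl.datasets.get(dataset_id="<your-dataset-id>")
#   recipe, tags = describe_dataset_recipe(dataset)
```

Passing the dataset in as an argument keeps the helper free of credentials and makes it easy to reuse across projects.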

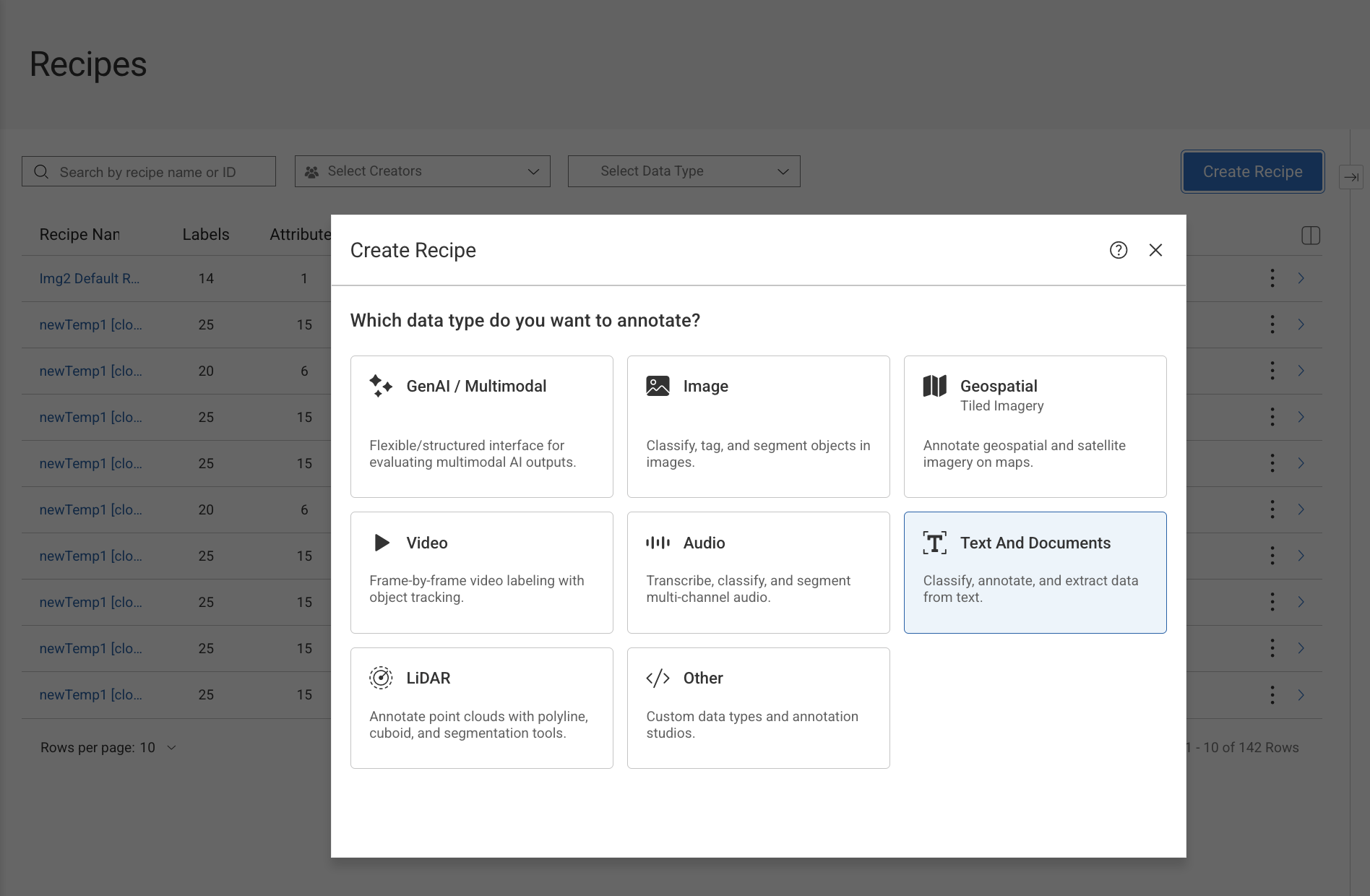

Recipe Types by Data and Task

The type of recipe you create depends on the context of the task (annotation vs. evaluation) and the data modality (image, video, text, audio, etc.).

1. GenAI / Multimodal

Purpose: Evaluation and review of multimodal AI outputs.

This recipe type provides a flexible or structured interface for evaluating outputs generated by Generative AI models. It supports single or multiple modalities such as text, images, audio, video, or combinations of these within a single task. Instead of labeling raw data, users assess model-generated responses against defined criteria, enabling consistent and scalable human feedback.

It is especially useful for human-in-the-loop evaluation, quality assurance, and model comparison, where subjective judgment and structured scoring are required.

Common use cases include:

LLM response evaluation and ranking

Image–text alignment and relevance checks

Human feedback collection and scoring

Structured GenAI benchmarking and model comparison

This recipe is best suited for AI output evaluation and validation, rather than traditional data annotation workflows.

2. Image

Purpose: Annotation of static image data.

The Image recipe is designed for labeling and annotating still images used in computer vision tasks. It provides intuitive visual tools that allow annotators to accurately identify objects, regions, or attributes within an image.

This recipe supports both simple classification tasks and advanced pixel-level annotations, making it suitable for a wide range of vision-based AI applications.

Common tasks include:

Image classification and tagging

Object detection using bounding boxes or polygons

Semantic and instance segmentation

These annotations are commonly used to train and validate computer vision models in domains such as retail, healthcare, manufacturing, and security.

3. Geospatial (Tiled Imagery)

Purpose: Annotation of large-scale, map-based imagery.

This recipe is tailored for geospatial datasets where images are too large to be handled as single files and are instead rendered as map tiles. It enables location-aware annotation with precise geographic context, allowing users to zoom, pan, and annotate accurately across vast areas.

Annotations are tied to real-world coordinates, making this recipe essential for spatial analysis tasks.

Common domains include:

Satellite imagery analysis

Aerial and drone photography

GIS, mapping, and land-use applications

It is widely used in environmental monitoring, urban planning, agriculture, and defense use cases.

4. Video

Purpose: Annotation of temporal visual data.

The Video recipe supports annotation of data where time and motion are critical components. It allows annotators to work frame by frame while maintaining continuity across the video timeline.

Advanced features such as object tracking help reduce repetitive work and improve annotation consistency over long sequences.

Key capabilities include:

Frame-by-frame labeling

Object tracking across frames

Temporal event, action, and behavior annotation

This recipe is ideal for applications like autonomous driving, surveillance, sports analysis, and activity recognition.

5. Audio

Purpose: Processing and annotation of audio signals.

The Audio recipe is designed for working with sound-based data, enabling precise annotation along a time axis. Users can label, segment, and transcribe audio with fine-grained control over timestamps.

It supports both single-channel and multi-channel audio, making it suitable for complex audio analysis scenarios.

Supported tasks include:

Speech transcription

Audio classification (e.g., noise, music, speaker type)

Time-based audio segmentation

Multi-channel audio workflows

Common use cases include speech recognition systems, call center analytics, and acoustic event detection.

6. Text and Documents

Purpose: Natural Language Processing and document understanding.

This recipe enables annotation and extraction tasks for textual data, ranging from simple text classification to complex document parsing. It supports both raw text and formatted documents, including those processed with OCR.

Users can define structured outputs to convert unstructured text into machine-readable data.

Typical tasks include:

Text classification and categorization

Entity and span annotation (NER)

Structured data extraction from documents

It is commonly used for NLP models, document AI, and enterprise automation workflows.

7. LiDAR

Purpose: Annotation of 3D point cloud data.

The LiDAR recipe is designed for three-dimensional data captured by sensors such as LiDAR scanners. It provides specialized tools that allow annotators to label objects in 3D space with high spatial accuracy.

Annotations reflect real-world dimensions and spatial relationships, which are critical for downstream modeling.

Supported tools and tasks include:

Cuboid and polyline annotation

3D segmentation

Spatial object labeling in point clouds

This recipe is primarily used in autonomous driving, robotics, mapping, and simulation environments.

8. Other

Purpose: Custom or non-standard data types.

The Other recipe category is intended for use cases that do not fit into standard data modalities. It allows teams to build custom annotation studios and workflows tailored to specialized or experimental data formats.

This flexibility makes it possible to support proprietary data types, emerging modalities, or highly customized task requirements without being constrained by predefined templates.

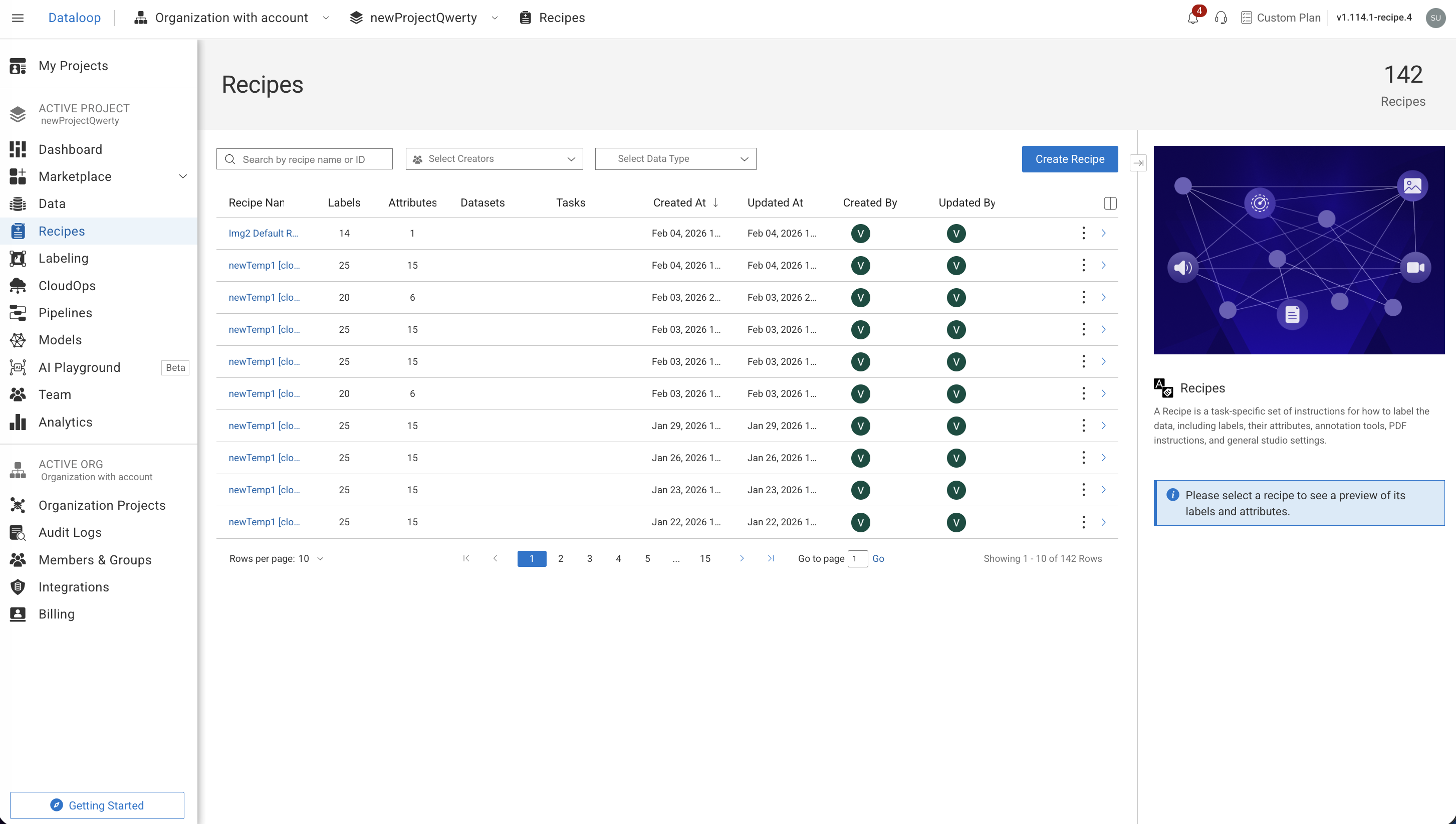

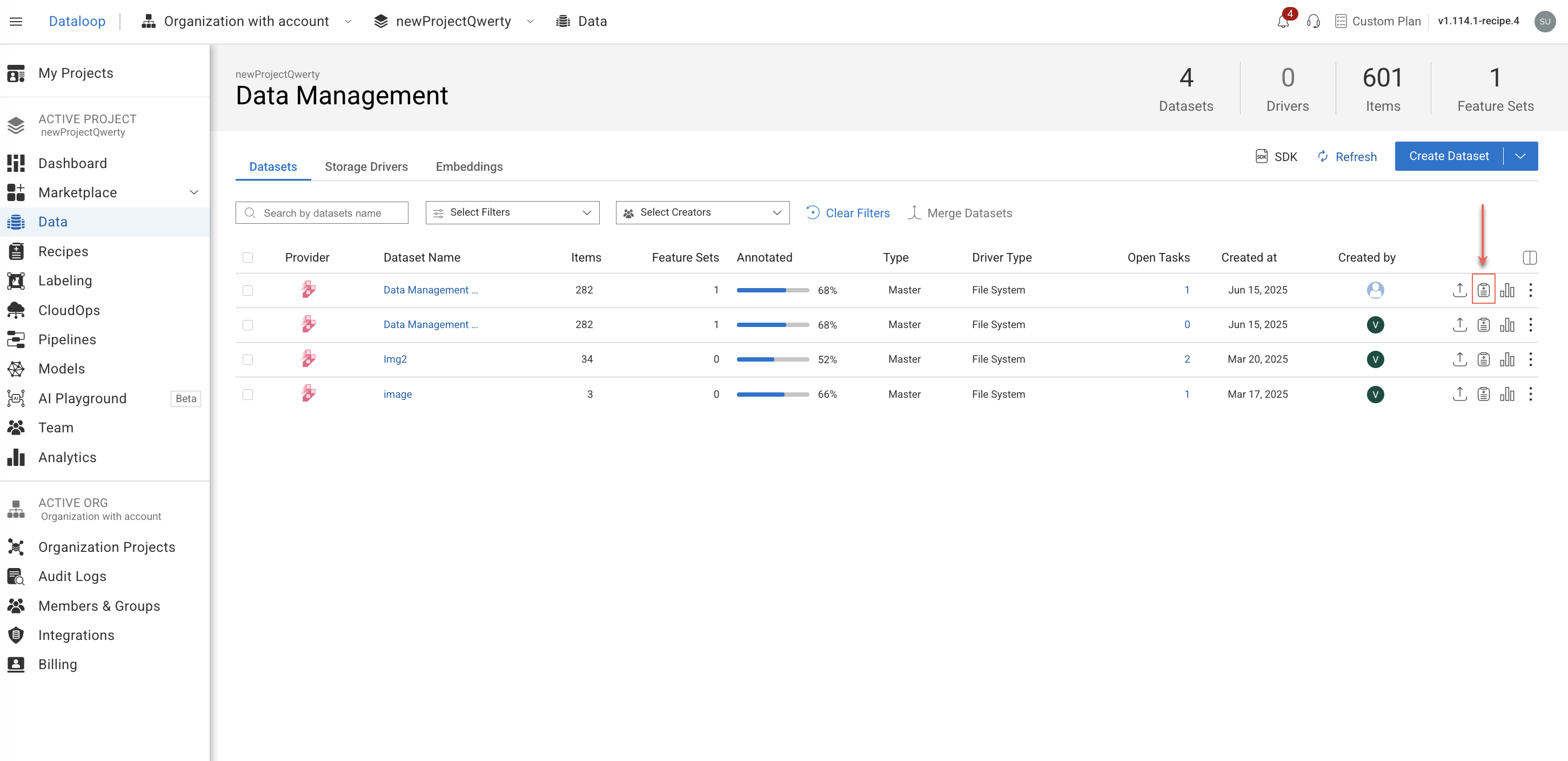

Access Recipes

To open the recipe page for a specific Dataset, use one of the following options:

From the Dashboard → Data Management table:

Find the Dataset from the list.

Click on the Recipe icon to view recipe details.

From the Recipes menu:

Click Recipes in the left-side menu.

Locate/search for the recipe from the list.

Click on the recipe.

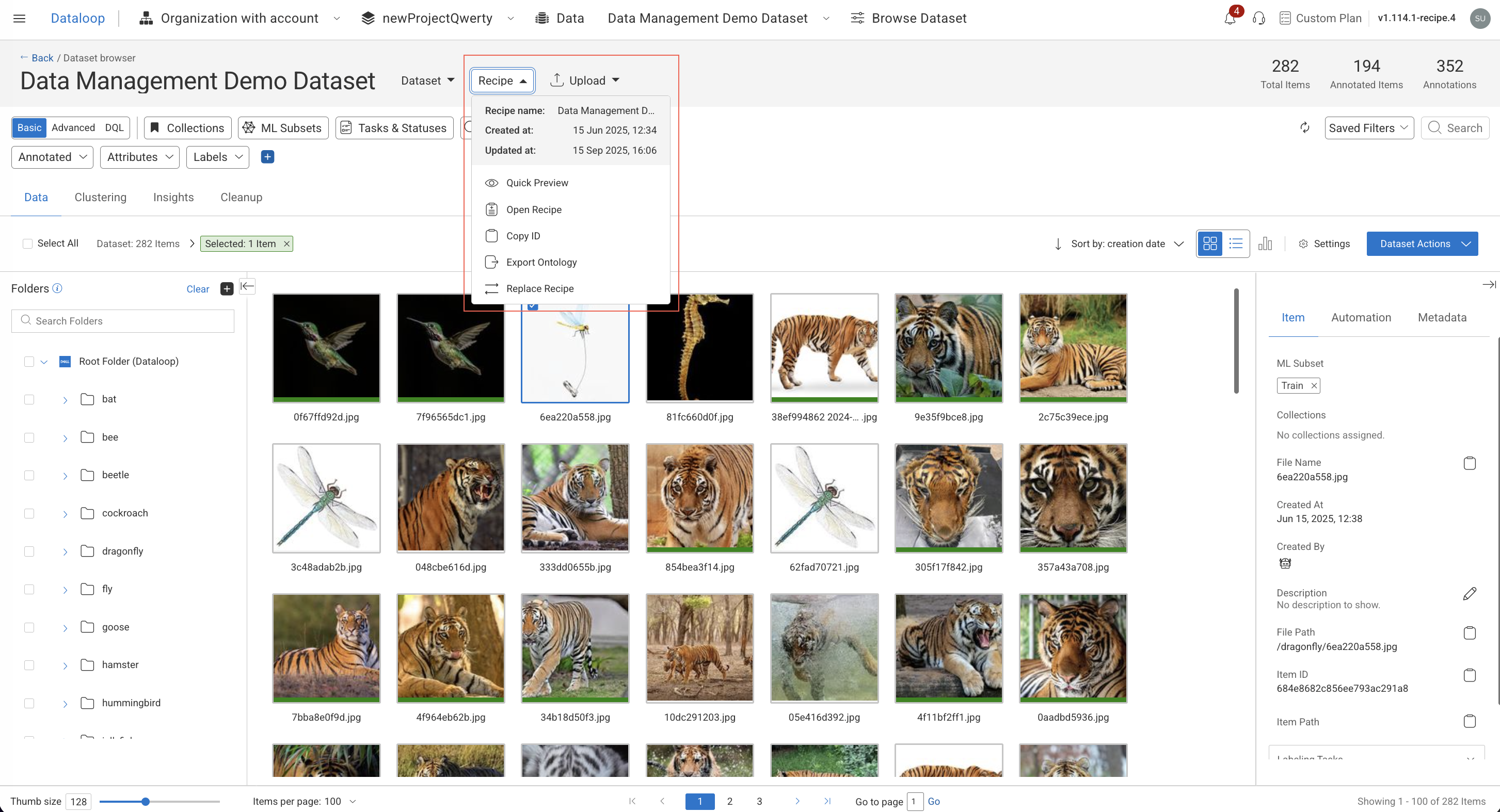

From the Dataset Browser:

Open the Dataset Browser.

In the right-side panel of Dataset Details, click on the recipe link from the Recipe field.

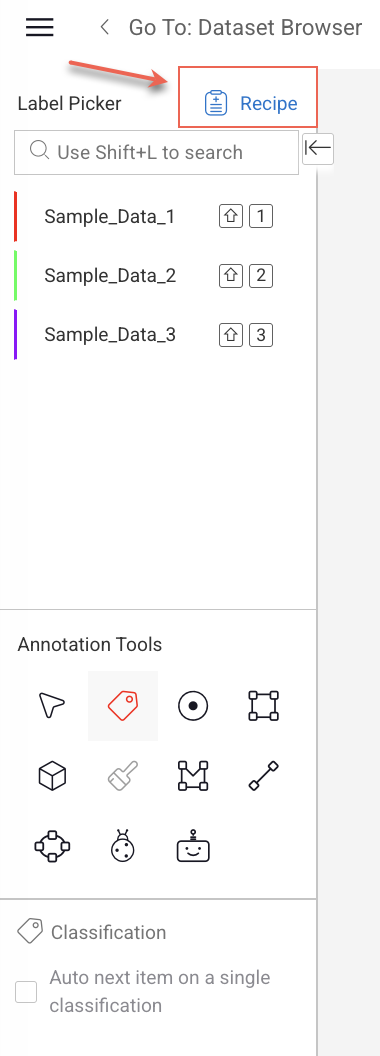

From Annotation Studios:

Click on the Recipe link above the label-picker section.

Permission

Only users with the Annotation Manager role or above can access the recipe from Annotation Studios.

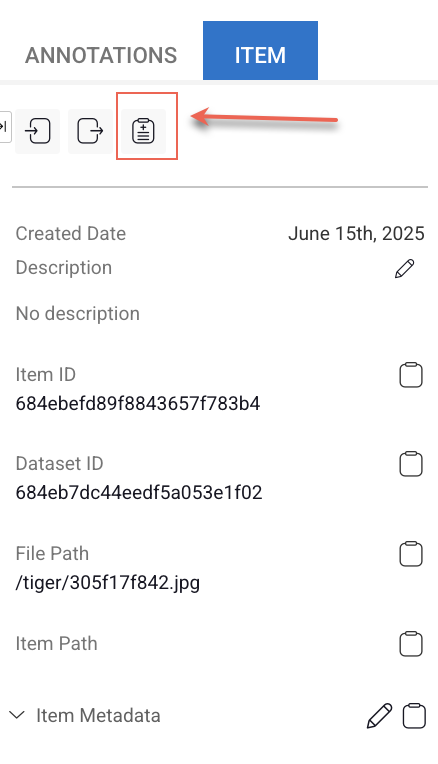

Or, select the Item tab from the right-side panel.

Click on the Recipe icon in the Item-Info tab.

View Preview of a Recipe

Click Recipes in the left-side menu.

Locate or search for the desired recipe in the list.

Select the recipe. A preview of the selected recipe will appear in the right-side panel, displaying its labels and attributes.

From this page you can edit recipes, export their ontology, clone them, and perform other actions on the recipes used across your datasets and annotation projects.

Manage Your Recipes

Edit Recipes

Click Recipes in the left-side menu.

Locate or search for the desired recipe in the list.

Click on the ⋮ Three Dots and select Edit Recipe from the list.

Make required changes and click Save.

Copy Recipe ID

Click Recipes in the left-side menu.

Locate or search for the desired recipe in the list.

Click on the ⋮ Three Dots and select Copy Recipe ID from the list. The recipe ID will be copied.

Delete Recipes

Click Recipes in the left-side menu.

Locate or search for the desired recipe in the list.

Click on the ⋮ Three Dots and select Delete Recipe from the list.

Click Delete to confirm. Deleting a recipe from a dataset will also remove it from any other datasets where it has been set as the active recipe.

Switch (or Change) the Recipe of a Dataset

To change the recipe linked to a dataset:

Click Data in the left-side menu.

Locate or search for the desired dataset in the list.

Click on the 3-dots action button of a dataset entry (from either the project overview or the Datasets page).

Select Switch Recipe.

Select a different recipe from the list and approve.
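The same switch can also be scripted. The sketch below assumes the `dtlpy` Dataset entity exposes a `switch_recipe(recipe_id=...)` method; verify this against your installed SDK version before relying on it. The wrapper name `switch_dataset_recipe` is hypothetical.

```python
def switch_dataset_recipe(dataset, recipe_id):
    """Link `dataset` to the recipe identified by `recipe_id`.

    Assumption: `dataset` is a dtlpy Dataset exposing
    `switch_recipe(recipe_id=...)`; this wrapper only forwards the call.
    """
    dataset.switch_recipe(recipe_id=recipe_id)
    return dataset


# Usage sketch (needs dtlpy and a logged-in session; IDs are placeholders):
#   import dtlpy as dl
#   dataset = dl.datasets.get(dataset_id="<your-dataset-id>")
#   switch_dataset_recipe(dataset, recipe_id="<target-recipe-id>")
```

The recipe ID can be obtained with the Copy Recipe ID action on the Recipes page.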

Updating the Ontology with a Label Thumbnail Using the SDK

The following code snippets demonstrate how to add a new label with a thumbnail and how to update an existing label to include thumbnail display data using the Dataloop SDK.

Adding a Label with a Thumbnail

    import dtlpy as dl

    # Fetch the dataset and take its first ontology
    dataset = dl.datasets.get(dataset_id="65f82c9fc26fd1bc97ea9915")
    ontology = dataset.ontologies.list()[0]

    # Add a new label with a thumbnail taken from a local image file
    ontology.add_label(label_name='dog', color=(34, 6, 231), icon_path=r"C:\Datasets\Dogs\dog.webp")

Updating an Existing Label with a Thumbnail

    import dtlpy as dl

    dataset = dl.datasets.get(dataset_id="65f82c9fc26fd1bc97ea9915")
    ontology = dataset.ontologies.list()[0]

    # Add label if not already existing
    ontology.add_label(label_name='dog', color=(34, 6, 231), icon_path=r"C:\Datasets\Dogs\dog.webp")

    # Create a label object with display data
    label = dl.Label(
        tag='dog',
        display_label='dog',
        display_data={'displayImage': {'itemId': '65fa86e310864a269c607064',
                                       'datasetId': "65f82c9fc26fd1bc97ea9915"}},
    )

    # Update the label in the ontology
    ontology.update_labels(label_list=[label], update_ontology=True)