Platform Release Notes

- Updated On 07 Mar 2023


September 29th, 2022

CSV Uploader

The Dataloop CSV Uploader tool has been improved and now also lets you:

- Add item description by updating the description root property of the item

- Update the item's metadata

Click here to read more about these new abilities.

Preemptible instances

The "Preemptible instances" setting for new FaaS applications is now "On" by default (under the app computing settings).

It can be changed back to regular instances at any time. To read more about preemptible instances and managing app config settings, please read here.

Sep 20th, 2022

Contributors Widget In Project Overview

As we continuously expand the options and features for managing project contributors, the contributors widget in the project overview can no longer be used to add contributors directly. Clicking 'Add Contributors' now takes you to the new page, where you can immediately start adding contributors.

Sep 12th, 2022

Polygon Edge Sharing

The Polygon tool now lets you interactively create a polygon that shares its edges with other Polygons, so there will be no overlap between them.

To enable this option:

Click on the Polygon tool > select "Edge Sharing"

Once you finish drawing a polygon that overlaps with other polygons, it will be updated automatically to share the edges of the overlapped polygon.

Reminder: You can draw polygon lines starting inside other polygons by holding the Shift key down.

Aug 25th, 2022

FaaS and Pipeline Triggers

The following triggers have been added to both FaaS and Pipeline:

- Item: clone (item.clone) - When an item is cloned, a trigger is sent with the newly created item as an input.

- Item: Assigned to Task (itemStatus.TaskAssigned) - When an item is assigned to a Dataloop task, a trigger is sent with the item as an input.

- Task: Status Changed (Task.statusChanged) - When a task's status changes to 'completed', a trigger is sent with the task as an input.

- Assignment: Status Changed (Assignment.statusChanged) - When an assignment's status changes to ‘done’, a trigger is sent with the assignment as an input.

All new triggers can be used in both execution modes, ‘once’ and ‘always’, except for the ‘item:clone’ trigger, which can occur only once per clone (when the item is created).

Additional information regarding FaaS can be found here, and regarding pipelines here.
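The difference between the two execution modes can be sketched in plain Python. This is an illustrative model only and does not use the Dataloop SDK: `TriggerSim` and its `fire` method are hypothetical names, and the sketch assumes that 'once' runs at most one time per resource while 'always' runs on every matching event.

```python
class TriggerSim:
    """Toy model of a trigger (hypothetical, not part of the Dataloop SDK)."""

    def __init__(self, event, mode):
        if mode not in ("once", "always"):
            raise ValueError("mode must be 'once' or 'always'")
        self.event = event        # e.g. "Task.statusChanged"
        self.mode = mode
        self._seen = set()        # resource ids already handled
        self.executions = []      # resource ids that produced an execution

    def fire(self, event, resource_id):
        """Return True if this event leads to an execution."""
        if event != self.event:
            return False
        # Assumption: 'once' skips resources that already triggered an execution.
        if self.mode == "once" and resource_id in self._seen:
            return False
        self._seen.add(resource_id)
        self.executions.append(resource_id)
        return True


once = TriggerSim("Task.statusChanged", "once")
always = TriggerSim("Task.statusChanged", "always")
for trigger in (once, always):
    trigger.fire("Task.statusChanged", "task-1")
    trigger.fire("Task.statusChanged", "task-1")  # same task changes status again
    trigger.fire("item.clone", "item-9")          # different event, ignored

print(len(once.executions), len(always.executions))  # prints: 1 2
```

In this model the same task changing status twice produces one execution in 'once' mode and two in 'always' mode.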

Aug 8th, 2022

Video Annotation

We’ve improved the process of changing video annotations (labels, attributes) to enable faster and more accurate work, especially when correcting model pre-annotations. From now on, when performing such an update, select one of the following duration options:

- Next key frame

- Specific frames - set the requested range of frames

- End of annotation

Additional information is provided here.

Old Analytics

Old dataset and task analytics will be deprecated from this release on.

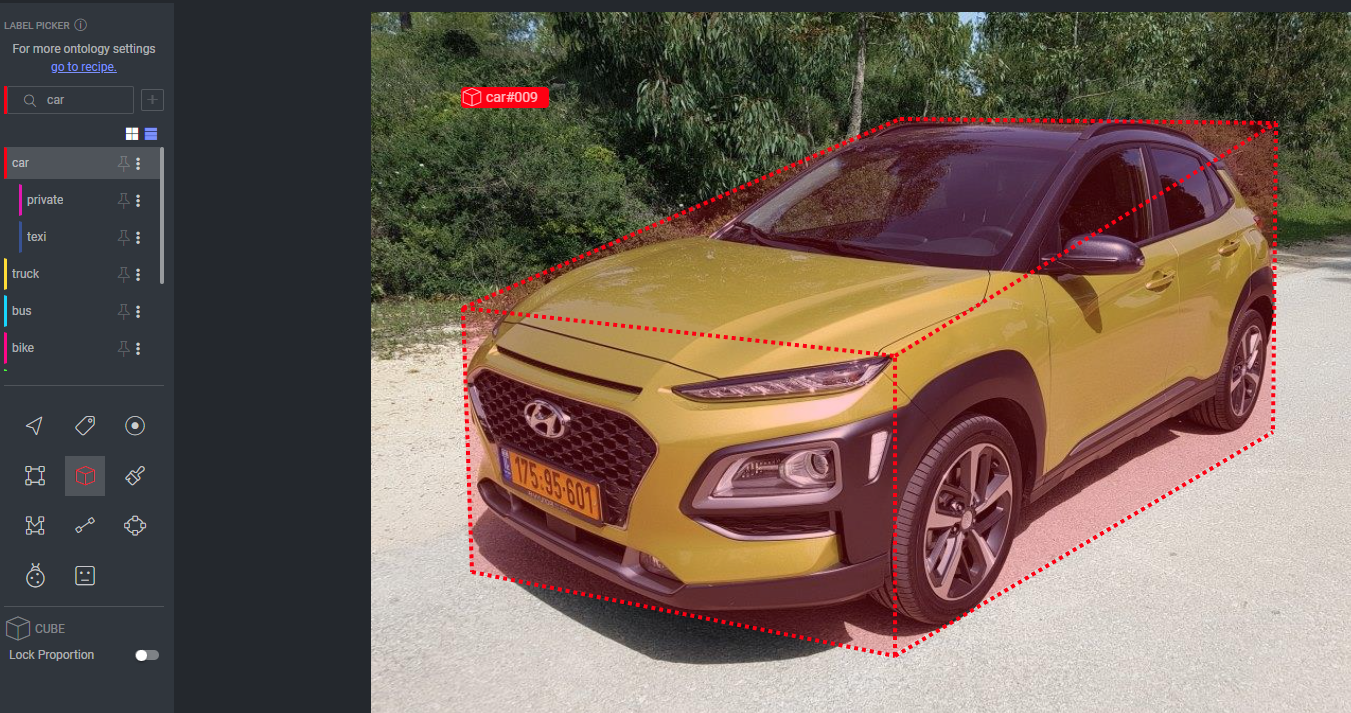

Cuboid Edge Points

This release lets you use the 8 edge points of the cuboid (4 on the front and 4 on the back) to adjust it according to the orientation of the annotated object.

Additional information is provided here.

An example is displayed below.

Item & Annotation Description Migration

From this release, the item description is available as a root property of the item (Item.description), and the annotation description becomes a root property of the annotation (Annotation.description). Note that it is removed from the annotation system metadata.

This update affects the following:

- Migration - All existing item descriptions are transferred to the root property (item.description) and no longer appear in the annotations JSON or in the items' system metadata. The same applies to Annotation.description.

- SDK - You must upgrade to the latest SDK version to create or update item/annotation descriptions via the SDK. These actions are not available in older versions (you may receive an error).

- Dataset browser filter - To filter items by whether they have a description, use the new "Described"/"Not-described" filters under the "Item status" section. item.description is no longer counted under the "Annotated"/"Not Annotated" filters.

- Image Captioning - To use the item description for labeling purposes, we recommend using the classification tool and adding a description (note) over the annotation.
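The effect of the migration on an item's JSON can be sketched as follows. This is a minimal illustration in plain Python, not SDK code: the pre-migration location (`metadata.system.description`) is an assumption made for the example, and `migrate_description` and `is_described` are hypothetical helpers.

```python
import copy


def migrate_description(item_json, old_path=("metadata", "system", "description")):
    """Return a copy of `item_json` with the description moved to the root.

    `old_path` is an assumed pre-migration location, used for illustration only.
    """
    migrated = copy.deepcopy(item_json)
    *parents, key = old_path
    node = migrated
    for name in parents:
        node = node.get(name, {})
    if key in node:
        migrated["description"] = node.pop(key)
    return migrated


def is_described(item_json):
    """Mimic a "Described" filter: true when the root description is non-empty."""
    return bool(item_json.get("description"))


old_item = {"name": "cat.jpg", "metadata": {"system": {"description": "a sleeping cat"}}}
new_item = migrate_description(old_item)
print(new_item["description"], is_described(new_item), is_described(old_item))
```

After the move, the description is read from the root of the item only, which is why a filter based on the root property sees the migrated item as described and the old layout as not described.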

July 26th, 2022

Private container registry

This release lets you connect your Docker images to Dataloop using either ECR (AWS) or GCR (GCP) private Docker registries.

- Create an Organization-Integration of 'private container registry' type

- Specify the docker image details in the FaaS application-configuration

Additional information is provided here.

Audio Studio

This release adds the following operations to the audio studio.

Audio Binding

This option can be toggled from the control bar by using the slider (on/off modes).

ON mode (default) - while playing, selecting an annotation from either the audio signal or the annotation list sets the playback position to the beginning of that annotation

OFF mode - removes this binding, so moving between annotations, either from the audio wave or from the annotation list, does not change the audio play position

Keyboard shortcuts

Use this menu to view the relevant shortcuts:

| Shortcut | Operation |
|---|---|
| / | Toggle audio binding |
| [ / ] | Move down/up one play speed |
| L | Loop playback |
| < / > | Move to left/right annotation respectively |
| 5s/-10s/-20s/+s/+10s/+20s | Jump back/forward X seconds |

Speed change

Click on the current speed and select the relevant one from the drop-down list (0.5, 0.75, 1, 1.25, 1.5 and 2). The default speed is x1.

Loop playback

This button lets you loop a section of an audio item within the boundaries of the current selection range.

Loopback mode -

a) If an annotation is selected, it plays in a loop until playback is paused or “loop playback” mode is exited

b) If no annotation is selected, the audio loops back when it reaches the end
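The two loopback cases can be sketched as a small position-update function. This is an illustrative model, not the studio's implementation: `next_position` is a hypothetical helper, times are in seconds, and it assumes playback wraps to the start of the selected annotation (case a) or to the start of the file (case b).

```python
def next_position(position, step, audio_length, selection=None):
    """Advance playback by `step` seconds while loop playback is on.

    selection: (start, end) of the selected annotation, or None.
    """
    new_position = position + step
    if selection is not None:
        start, end = selection
        # Case a: loop inside the selected annotation.
        return start if new_position >= end else new_position
    # Case b: no selection, loop back when the end of the audio is reached.
    return 0.0 if new_position >= audio_length else new_position


print(next_position(4.5, 1.0, 60.0, selection=(2.0, 5.0)))  # wraps to 2.0
print(next_position(59.5, 1.0, 60.0))                       # wraps to 0.0
```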

July 11th, 2022

Project Issues

The issues list has been updated and now includes the following modes:

- My Issues - issues on annotations created by the current user that are pending correction

- Project issues - all issues created by contributors in the project

Analytics - Annotations By Tool

The annotations table includes an additional tab, ‘By Tools’ (see below), detailing the annotation tools used to create annotations (e.g. Polygon, Box, etc.).

FaaS Service Page - Analytics

A new page has been added to the platform displaying metrics for running services, allowing you to monitor, control, and debug your service. These metrics include Queue size, Number of replicates, Execution duration, Successful executions, Failed executions, and Executions by functions.

WEBM Convertor Changes

Dataloop's WEBM converter now runs as a project-installed service rather than as a global service (as it did until now). The new project-installed WEBM converter service is configured to run on demand only, when creating workflow tasks (Annotations/QA) containing video items.

For more information and details, read here.

June 28th, 2022

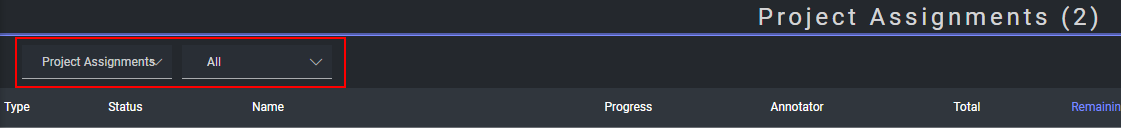

Workflow Management - Project Assignments

Users with a project role of annotation manager or higher (developer, owner) can see a new option on the assignments page: a drop-down that switches context between "Project assignments", which shows all assignments in the project, and "My Assignments", which works the way this page always has, showing only the user's assignments.

Combined with a new "Assignee" filter, the Project-Assignments mode allows you to monitor workload and overall progress of specific users.

FAAS

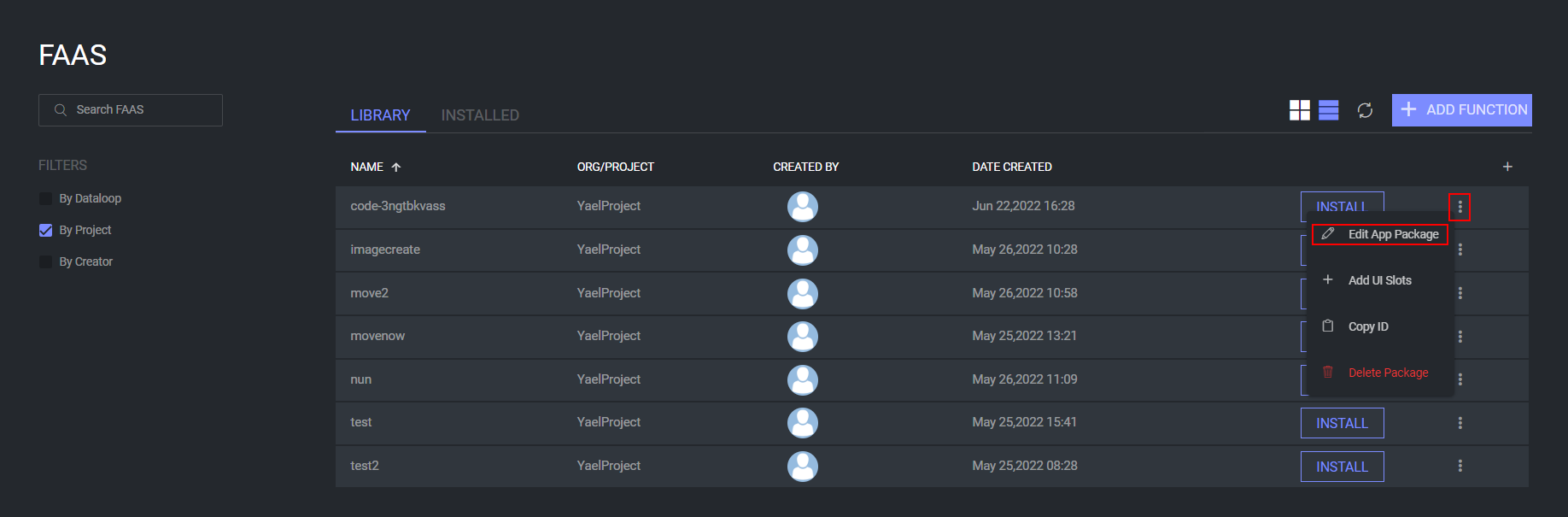

Edit and Update Package

The platform now offers editing and updating of a requested package as follows:

Click on Edit app package option (see below)

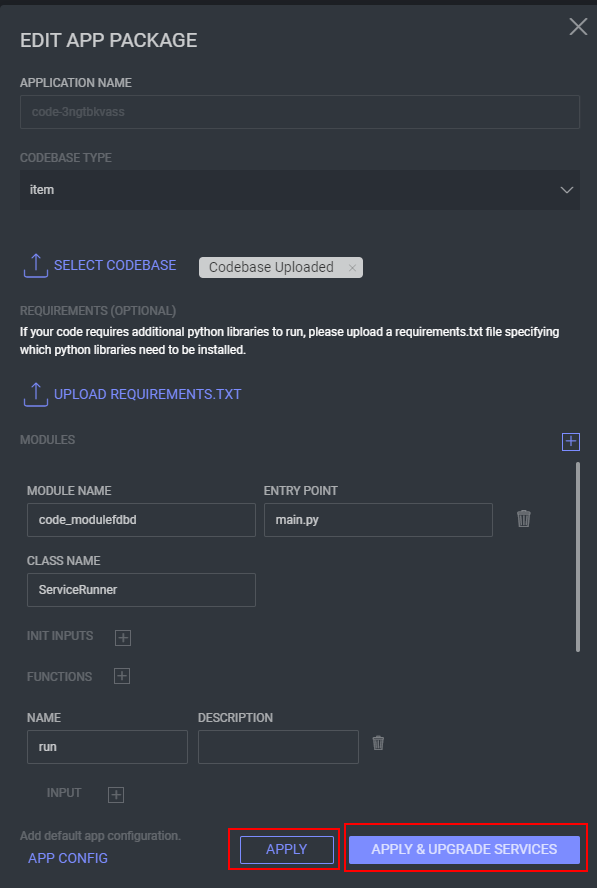

Consequently, Edit app package window is displayed (see below)

An application name can’t be modified once it has installed services. Therefore, if this is relevant, the application name field will be disabled.

Choose the matching apply button:

a) Apply - only to the package

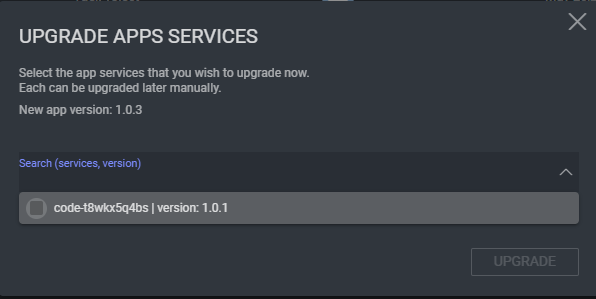

b) Apply & update services - clicking this button opens the Upgrade app services window (see below), where you can select the relevant services from the drop-down list.

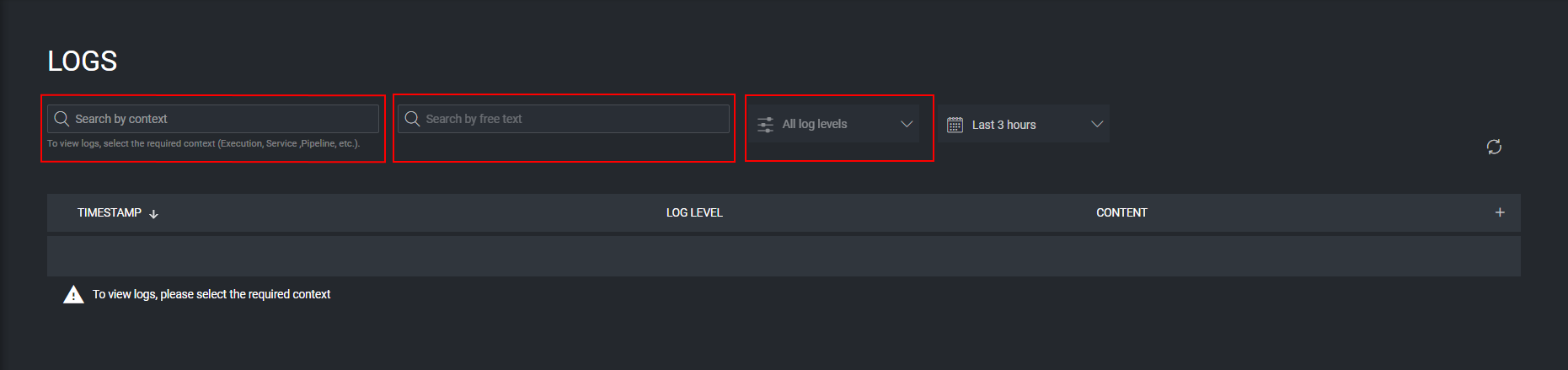

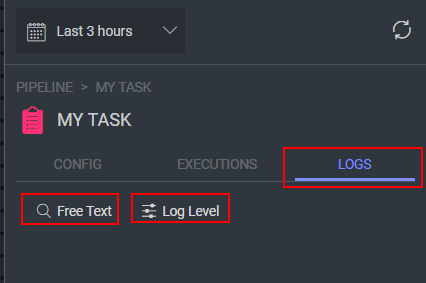

Log filter additions

To improve work with log data, log filtering has been significantly improved.

The updated filters include the following:

Filter by context - relates to execution, service and pipeline cases.

Filter by free text - a smart search that lets you search for more than one phrase.

Filter by log level - refers to log severity and relates to debug, info, warning, error, and critical cases.

Note that the context filter must be applied before the free text and log level filters; otherwise, those two filters are disabled.
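The way the three filters combine can be sketched in plain Python. This is a hedged illustration rather than the platform's logic: `filter_logs` and the log record fields are hypothetical, a log is assumed to match the free text when it contains any of the phrases, and the selected log level is assumed to act as a minimum severity.

```python
LEVELS = ["debug", "info", "warning", "error", "critical"]


def filter_logs(logs, context=None, phrases=(), min_level=None):
    """Filter log records by context, then by free text and minimum level."""
    if context is None:
        # Mirrors the rule that the context filter comes first.
        if phrases or min_level:
            raise ValueError("select a context before free text or log level")
        return list(logs)
    result = [log for log in logs if log["context"] == context]
    if phrases:
        # Assumption: a record matches when it contains ANY of the phrases.
        result = [
            log for log in result
            if any(p.lower() in log["message"].lower() for p in phrases)
        ]
    if min_level is not None:
        threshold = LEVELS.index(min_level)
        result = [log for log in result if LEVELS.index(log["level"]) >= threshold]
    return result


logs = [
    {"context": "service", "level": "info", "message": "service started"},
    {"context": "execution", "level": "error", "message": "timeout while loading item"},
    {"context": "execution", "level": "debug", "message": "loading item"},
    {"context": "execution", "level": "critical", "message": "out of memory"},
]
hits = filter_logs(logs, context="execution", phrases=["timeout", "memory"], min_level="warning")
print([log["message"] for log in hits])
```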

Pipeline

Log Filter additions

To improve log review in pipelines, the filtering options have been enhanced.

The updated filters include the following:

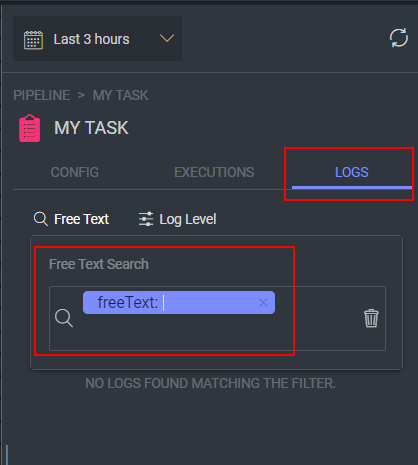

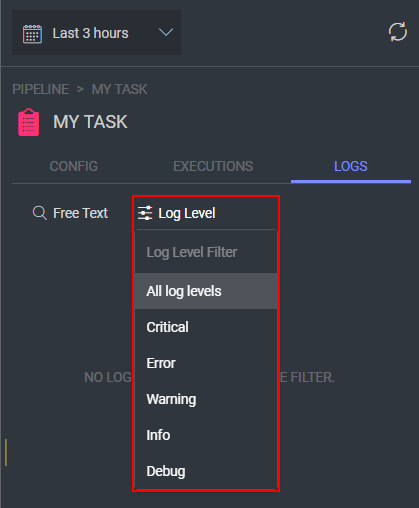

Filter by free text - a smart search that lets you search for more than one word.

Filter by log level - refers to log severity and relates to debug, info, warning, error and critical cases.

Note the following:

- To use the free text filter, click the free text box, select the freeText option (see below), and type the relevant text next to it.

- To use the log level filter, select the requested level from the drop-down list. The selected level acts as a minimum: the displayed results include that level and the more severe ones.

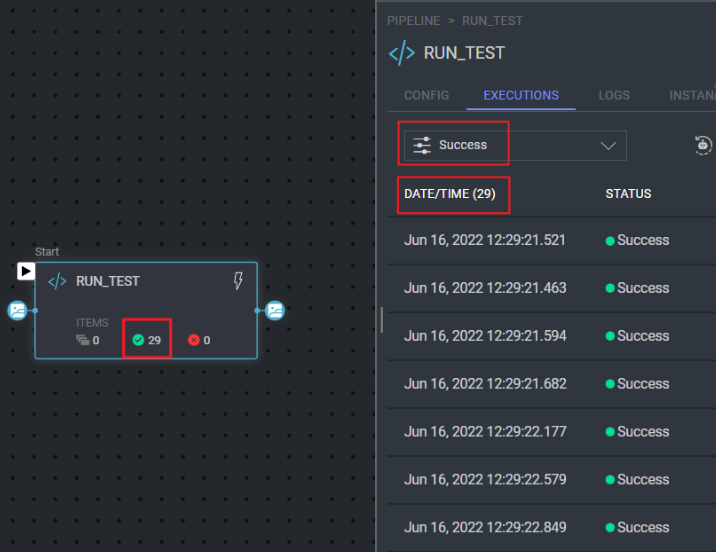

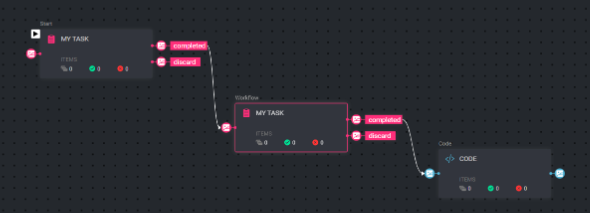

Node counters

As of this release, the node counters reflect the executions run on the node, rather than the number of items run. The counters show the following, from left to right:

- Running executions or executions awaiting in the queue

- Successful executions

- Failed executions

The change in counters might be noticeable when running over a list of items (item[]). In some compositions, the counter may now show 1 execution instead of the N items executed in a given list.

For example: The screenshot below demonstrates 29 successful executions over a code node (“RUN_TEST”), visible both on the node counters and on the filtered node execution list on the right panel.
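The shift from counting items to counting executions can be sketched as follows. This is a plain-Python illustration with hypothetical record fields and status names (`running`, `queued`, `success`, `failed`); it only shows that an execution over a list of items counts once.

```python
from collections import Counter


def node_counters(executions, node_name):
    """Return (running or queued, successful, failed) counts for one node."""
    counts = Counter(
        execution["status"]
        for execution in executions
        if execution["node"] == node_name
    )
    in_progress = counts["running"] + counts["queued"]
    return in_progress, counts["success"], counts["failed"]


executions = [
    # One execution that received a list of three items (item[]): counted once.
    {"node": "RUN_TEST", "status": "success", "items": ["item-1", "item-2", "item-3"]},
    {"node": "RUN_TEST", "status": "failed", "items": ["item-4"]},
    {"node": "RUN_TEST", "status": "queued", "items": ["item-5"]},
    {"node": "OTHER", "status": "success", "items": ["item-6"]},
]
print(node_counters(executions, "RUN_TEST"))  # (1, 1, 1)
```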

June 14th, 2022

Audio studio

Dataloop audio studio has been renovated to be on par with the latest image/video annotation studio features, including attribute support, new label selection, and more. For the complete audio studio documentation, read here.

NLP studio

The NLP studio now supports the EML file format for email content. For more information, read here.

New-Dataset

The popup for creating a new dataset has been redesigned, and is now in the new Dataloop UI format.

In addition, when creating a new Dataset it is now possible to directly add a new integration (and not just a new storage driver), so you can keep working in one place without navigating between different screens.

Instructions PDF From Task-Browser

The PDF instructions button is now available in the top toolbar of the task and assignment browsers. Annotators can now access the task instructions when working from the browser, and not just from the annotation studio (for example, when working with bulk classification).

Pipeline

Code Editor

The code editor can now be opened while in View mode.

In View mode, users can only view the code; changes can’t be made.

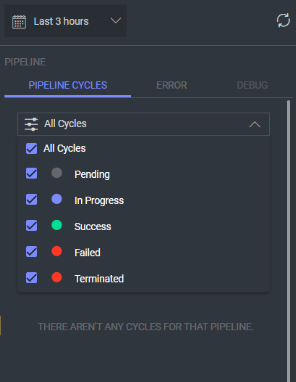

Cycle Filter

The pipeline cycles list (‘Pipeline cycle’ tab, as displayed below) can now be filtered. The filter follows the input parameter of the pipeline: if the input is an item, filter by item; if it is a task, filter by task.

New Status for Pipeline Cycles

A new status, “Pending”, has been added. It refers to queued items awaiting processing.

Note that this status is displayed on the right and is not reflected on the status bar. On the status bar, a ‘Pending’ cycle is displayed with the ‘Start’ status.

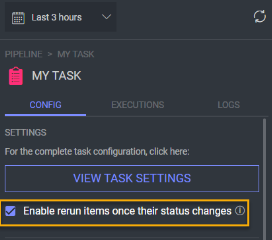

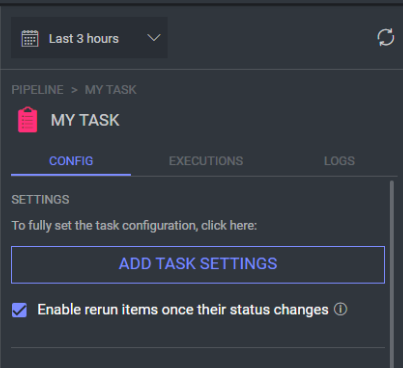

Pipeline Task Node - Rerun

A checkbox has been added to the task node “config” tab (see below) to enable re-sending items as the task node outputs when their status is updated. As a result, the subsequent pipeline nodes may be re-executed with the same items.

This option is enabled by default and is applied automatically to all existing pipelines.

Example:

If there are two tasks to complete and the first task has an issue that changes an item's status, the process won’t go back. Every status change in the task acts as a trigger and causes the run to reoccur.

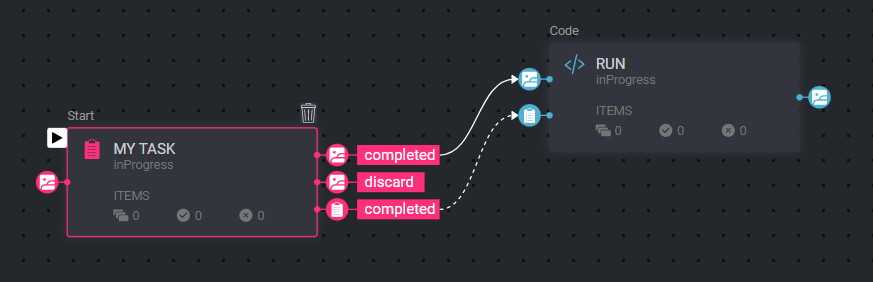

For example, if there are two annotation tasks (pipeline structure as displayed below) -

- Annotation of a cat

- Annotation of a dog

The annotator didn’t correctly annotate the ‘dog’ and the QA opened an issue on it. As a result, this item’s status will be set as ‘In progress’ in the pipeline and the item will be sent back to the annotator. The item’s status becomes ‘Completed’ only after the second task has been completed. This also applies to status updates made by the annotator.

The code in the pipeline below will be executed only once even if the item has been completed.

Task Node Configuration

The task node settings are now in the same format as in the standard workflow. Click on “ADD TASK SETTINGS” to open the task details dialogue box.

To set/edit the task statuses (completed, discard, etc.), use the dialogue box under the “instructions” section. Statuses are reflected as node outputs on the pipeline canvas. Note that the statuses can no longer be defined from the node’s outputs section. For additional information, read here.

May 24th, 2022

Additional IP Address

As part of our maintenance, on May 24, 2022 we assigned new dedicated IP addresses to our servers.

If you are whitelisting Dataloop IPs, you should update your whitelist (the list a firewall uses to allow only specified IP addresses to perform certain tasks or connect to your system) to ensure your services and API integrations continue to run. The full list of relevant IP addresses is:

34.76.183.75

104.199.5.216

35.241.168.191

35.240.12.251
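As a quick sanity check on your side, an allowlist lookup for these addresses can be sketched with Python's standard `ipaddress` module. The `is_allowed` helper is a hypothetical name for illustration; real firewall rules are configured in your own infrastructure, not in Python.

```python
import ipaddress

# The four addresses listed above.
DATALOOP_IPS = {
    ipaddress.ip_address(ip)
    for ip in ("34.76.183.75", "104.199.5.216", "35.241.168.191", "35.240.12.251")
}


def is_allowed(source_ip):
    """Return True when `source_ip` is one of the listed Dataloop addresses."""
    return ipaddress.ip_address(source_ip) in DATALOOP_IPS


print(is_allowed("34.76.183.75"))  # True
print(is_allowed("10.0.0.1"))      # False
```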

Pulling Task Type

Task creation now has the option to use the "Pulling" allocation method, where items in the task are queued and delivered to assignees in small batches. The batch size and the maximum number of items for any assignee are configurable.

For more information, read here.
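The pulling method can be pictured as a shared queue that hands out small batches. The sketch below is a simplified model, not Dataloop's implementation: `PullingTask` is a hypothetical class, and it assumes the per-assignee maximum limits the number of items an assignee holds at one time.

```python
from collections import deque


class PullingTask:
    """Toy model of a task that delivers queued items in batches."""

    def __init__(self, item_ids, batch_size, max_items):
        self.queue = deque(item_ids)
        self.batch_size = batch_size
        self.max_items = max_items   # assumed cap on items held per assignee
        self.assigned = {}           # assignee -> items currently held

    def pull(self, assignee):
        """Deliver the next batch to `assignee`, respecting both limits."""
        held = self.assigned.setdefault(assignee, [])
        room = self.max_items - len(held)
        count = max(0, min(self.batch_size, room, len(self.queue)))
        batch = [self.queue.popleft() for _ in range(count)]
        held.extend(batch)
        return batch

    def complete(self, assignee, item_id):
        """Mark an item as done so the assignee can pull more."""
        self.assigned[assignee].remove(item_id)


task = PullingTask(range(10), batch_size=3, max_items=5)
first = task.pull("dana")    # full batch
second = task.pull("dana")   # limited by max_items
third = task.pull("dana")    # nothing until an item is completed
task.complete("dana", 0)
fourth = task.pull("dana")
other = task.pull("lee")
print(first, second, third, fourth, other)
```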

New Audio Studio

Dataloop's legacy Audio annotation studio, for audio classification and audio transcription, has been upgraded. In addition to a UI redesign, the Audio studio is now compatible with the latest attributes structure and includes all the annotation work controls that the Image-annotation and Video-annotation studios have.

Pipeline Filters - Supported Entities

DQL filters used with Pipelines can now use any Dataloop entity type - Project, Dataset, Execution, Service, Task, Assignment etc.

March 20th, 2022

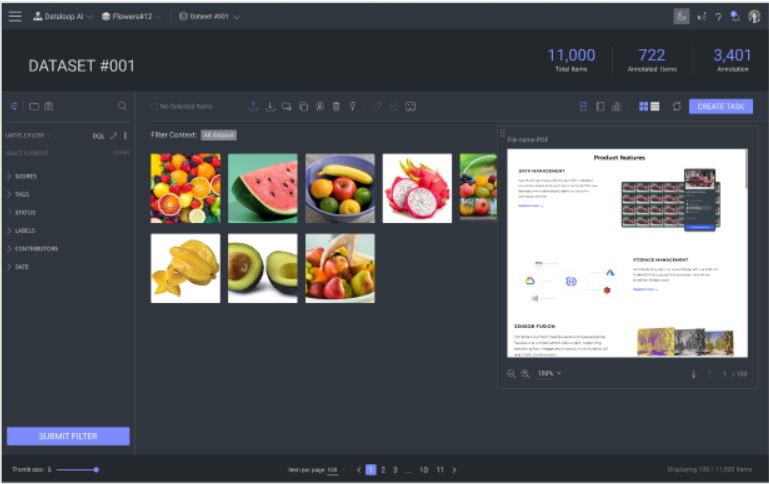

Create Task

"Create Task" has been updated with additional features added to the task creation process (e.g., selecting a recipe, adding actions to tasks, and more) and is available in the Tasks page as well as in the Dataset Browser.

For more information, read about creating an annotation task, creating a QA task, and reassigning and redistributing tasks.

Crop Out Box Annotations – Pipeline Node

The Crop Out Box Annotations node takes in items and creates a new item for every box annotation.

For more information read about Crop Out Box Annotations.

February 28th, 2022

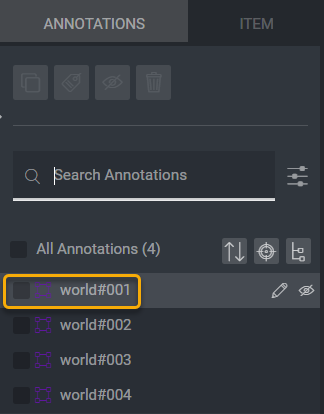

Searching Annotations by Part of Their Name

Annotations can be searched by their full name or a part of their name. For example, searching for “001” in the annotation list below will return the annotation "world#001."
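The matching behavior described above amounts to a substring search. A minimal sketch, assuming case-insensitive matching (the source does not say) and using a hypothetical `search_annotations` helper:

```python
def search_annotations(names, query):
    """Return the annotation names that contain `query` anywhere in the name."""
    needle = query.lower()
    return [name for name in names if needle in name.lower()]


names = ["world#001", "hello#002", "world#010"]
print(search_annotations(names, "001"))  # ['world#001']
```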

Pipeline – Making a Code or FaaS Component Wait for a Task or Assignment to Be Completed before Running

To set a Code or FaaS component to run only when a specific task or assignment that is connected to that Code/FaaS component is completed, follow these steps:

Create an output in the Task component of type task or assignment and create an input in the Code or FaaS component of type task or assignment, accordingly.

Connect the Task component output to the Code/FaaS component input (the connection will appear as a dotted line). Now the Code/FaaS component will not run until the task/assignment is completed.

The dotted line connecting the components shows that the task/assignment being completed adds an additional cycle to the pipeline. Now, the number of cycles will be the number of items that go through the pipeline plus one cycle per task/assignment that is completed.

Click here for more information about pipelines.
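The cycle count described above is simple arithmetic, sketched here with a hypothetical helper name:

```python
def expected_cycles(item_count, completed_tasks_or_assignments):
    """Cycles = items through the pipeline + one per completed task/assignment."""
    if item_count < 0 or completed_tasks_or_assignments < 0:
        raise ValueError("counts must be non-negative")
    return item_count + completed_tasks_or_assignments


# 50 items pass through the pipeline and 2 assignments are completed.
print(expected_cycles(50, 2))  # 52
```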

February 14th, 2022

Filter by Date

A date selector has been added to the filters in the Dataset Browser and Task Browser, allowing you to add a date range to your filtering by status.

To apply a date filter, follow these steps:

- Select the status/statuses you wish to filter by.

- Click the date filter field and a calendar will open.

- Click on a date to select the first day and last day of the date range you wish to filter by. The date range you selected should be highlighted in the calendar.

- Press ESC to close the calendar. The date range should appear in the date field.

- Once you are ready to apply the filter, click the filter icon.

For example, you can filter all Annotated items that were given the status Completed between Feb 2nd and Feb 8th.

For more information on data filtering click here.
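The combined status and date-range filter from the example can be sketched in plain Python. The record fields (`status`, `status_date`) and the `filter_by_status_and_date` helper are hypothetical, and the range is assumed to include both the first and the last day.

```python
from datetime import date


def filter_by_status_and_date(items, statuses, start, end):
    """Keep items whose status is in `statuses` and was set within [start, end]."""
    return [
        item for item in items
        if item["status"] in statuses and start <= item["status_date"] <= end
    ]


items = [
    {"name": "a.jpg", "status": "completed", "status_date": date(2022, 2, 3)},
    {"name": "b.jpg", "status": "completed", "status_date": date(2022, 2, 9)},
    {"name": "c.jpg", "status": "discarded", "status_date": date(2022, 2, 5)},
]
matches = filter_by_status_and_date(items, {"completed"}, date(2022, 2, 2), date(2022, 2, 8))
print([item["name"] for item in matches])  # ['a.jpg']
```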

January 30th, 2022

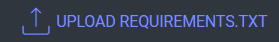

Specifying Library Requirements when Pushing Code Packages in FaaS

An Upload Requirements.txt option has been added to Add Function in the FaaS UI. If your FaaS code requires additional Python libraries to run, you can upload a requirements.txt file listing those libraries, for example:

numpy==1.18.1

opencv-python==3.4.2.17

For more information on pushing a package, see Faas User-Interface.

Dataloop Pipeline Update

Dataloop’s Pipeline has been updated with new features for tracking the cycles and execution of pipeline components and the pipeline as a whole, providing the ability to better monitor and control pipelines.

For complete information, see our documentation:

- Pipeline Overview

- Project Pipeline Page

- Creating Pipelines

- Composing Pipelines

- Pipeline Components

- Getting Data into the Pipeline

- Running and Monitoring Pipelines

## January 23rd, 2022

### Using Dataloop WebM Files with Video Annotations

Dataloop converts uploaded video files to WEBM-VP8 format to enable frame-accurate annotation.

However, as annotations are only accurate with respect to the WebM file, and NOT to the original file uploaded, you must download the WebM file and use it for model training, or any other use outside the Dataloop platform.

WebM Item ID and streaming URL can be found in the original video JSON file.

Click here for more information regarding these changes.

January 11th, 2022

Item Status per Task/Assignment

As of this release, an item's status is defined per task/assignment in which the item received the status – an item can be in different tasks and in each task have a different status.

As a result, the metadata of the item now includes an array of statuses in different tasks, and the item status can only be set in the UI from the Task Browser (not from the Dataset Browser).
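The idea of one status per task can be sketched with a small lookup. The structure below is hypothetical and only illustrates the concept: the key names (`statuses`, `task_id`, `status`) are assumptions and may not match the actual item metadata layout.

```python
def status_in_task(item_metadata, task_id):
    """Return the item's status in the given task, or None if it has none."""
    for entry in item_metadata.get("statuses", []):
        if entry["task_id"] == task_id:
            return entry["status"]
    return None


# Hypothetical metadata: the same item has a different status in each task.
item_metadata = {
    "statuses": [
        {"task_id": "task-a", "status": "completed"},
        {"task_id": "task-b", "status": "discard"},
    ]
}
print(status_in_task(item_metadata, "task-a"), status_in_task(item_metadata, "task-b"))
```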

Read more about the item status per task/assignment in the following articles: