- 20 Jan 2025

Overview

- Updated On 20 Jan 2025

Dataloop is an enterprise-grade data engine for managing the AI/ML project lifecycle with unstructured data (computer vision and NLP), from development to production at scale. As a knowledge automation company, Dataloop centers its platform on the AI model learning cycle, also called the data loop.

The core platform modules, such as Data Management, Workforce Management, Taxonomy Management, Compute Management, and Model Management, are the building blocks for:

Data Pipelines: to automate and streamline data processing with human-in-the-loop at scale.

Data applications: to manually label and process data at high quality and lower cost.

Custom applications: allowing developers to build project-specific solutions, either new or forked from Dataloop applications, driving the highest project efficiency.

Data Management

Enterprise-grade performance for unstructured data management and versioning.

Cloud-native: Ingest and sync from popular cloud storage providers, such as AWS, GCP, and Azure.

Performance: Sub-second queries on millions of files by item attributes, item metadata, or user metadata.

Versioning: Version your data in line with the corresponding model version.

Privacy: Meet data privacy standards.

Workforce Management

Work with labeling companies and domain experts while guiding their learning process and analyzing every aspect of progress and quality.

Team management: Assemble a cross-vendor workforce into groups by domain expertise.

Analytics: Monitor every aspect of work progress, such as performance, quality, and cost control.

Quality management: Ensure high-quality annotations for your supervised models through consensus and honeypot-based tasks.

Learning management: Guide the learning process toward domain expertise.
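The two quality mechanisms named above, consensus and honeypots, can be illustrated with a minimal sketch. It is not Dataloop's implementation: the function names, the majority-vote rule, and the `min_agreement` threshold are assumptions chosen for illustration. Consensus compares several annotators' labels for the same item, while a honeypot scores one annotator against items whose correct answer is already known.

```python
from collections import Counter
from typing import Dict, Optional, Tuple


def consensus_label(
    votes: Dict[str, str], min_agreement: float = 0.5
) -> Tuple[Optional[str], float]:
    """Majority vote over annotator labels for a single item.

    Returns (label, agreement). The label is None when the share of
    annotators backing the top label does not exceed min_agreement.
    """
    if not votes:
        return None, 0.0
    label, count = Counter(votes.values()).most_common(1)[0]
    agreement = count / len(votes)
    return (label if agreement > min_agreement else None), agreement


def honeypot_accuracy(answers: Dict[str, str], ground_truth: Dict[str, str]) -> float:
    """Share of honeypot items (items with a known answer) labeled correctly."""
    scored = [item_id for item_id in ground_truth if item_id in answers]
    if not scored:
        return 0.0
    correct = sum(answers[item_id] == ground_truth[item_id] for item_id in scored)
    return correct / len(scored)


# Hypothetical data: three annotators label the same image.
votes = {"alice": "cat", "bob": "cat", "carol": "dog"}
label, agreement = consensus_label(votes)
print(label, round(agreement, 2))

# Hypothetical data: one annotator's answers versus two seeded honeypot items.
truth = {"img1": "cat", "img2": "dog"}
answers = {"img1": "cat", "img2": "cat", "img3": "dog"}
print(honeypot_accuracy(answers, truth))
```

In this sketch, a tie between labels yields no consensus label, which would be the natural point to route the item to a reviewer.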

Automation & Compute Management

Function as a Service (FaaS): Deploy your codebase on a serverless compute platform to facilitate automation, pre- and post-processing of unstructured data, and workflow customization.

Developer first: Extensive examples and docs, debugging tools, logs, monitoring tools, and alerts.

Agility: Connect your code from Git, upload a package, or use our code editor.

Scale ready: From debugging on XS machines to production-ready autoscalers.

Model Management

Manage your models' lifecycle and ongoing development, alongside your data.

Continual learning pipeline: Easily set up and run a pipeline that keeps improving your AI model.

ML-Ops: Connect your model architecture (with weight files, if pre-trained) through Git to use it with your services (FaaS) and pipelines.

Efficient training: Optimize training and model performance with optimized, feature-based data selection.

Data Apps / Annotation Studios

Build or bring your own annotation studios, or use Dataloop's studios for image, video, audio, and NLP.

Efficiency: Tuned for performance and quality. Our data applications enable increased throughput without compromising on quality.

Labeling automation: Incorporate models and functions to automate the process, correct model annotations, and enforce annotation rules.

Taxonomy Management (Recipe & Ontology)

Represent the information applied to your data with hierarchically structured labels and attributes.

Recipe: A specific set of work instructions that includes the required labels and attributes, labeling tools, validation rules, and instruction documents.

Ontology automation: Connect your ontology via API.
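To make the idea of hierarchically structured labels and attributes concrete, here is a small sketch of an ontology tree with one recipe-style validation rule. The `Label` class, the dotted-path naming, and the "every declared attribute is required" rule are all assumptions made for illustration. They do not reflect Dataloop's actual schema or API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Label:
    """Hypothetical ontology node: a label, its attributes, and its sub-labels."""
    name: str
    attributes: List[str] = field(default_factory=list)
    children: List["Label"] = field(default_factory=list)


def flatten(labels: List[Label], prefix: str = "") -> Dict[str, Label]:
    """Map dotted paths such as 'vehicle.car' to their Label nodes."""
    paths: Dict[str, Label] = {}
    for label in labels:
        path = f"{prefix}.{label.name}" if prefix else label.name
        paths[path] = label
        paths.update(flatten(label.children, path))
    return paths


def validate(annotation: Dict[str, Any], ontology: Dict[str, Label]) -> List[str]:
    """Return rule violations for one annotation (empty list if valid)."""
    label = ontology.get(annotation["label"])
    if label is None:
        return [f"unknown label: {annotation['label']}"]
    given = annotation.get("attributes", {})
    return [f"missing attribute: {attr}" for attr in label.attributes if attr not in given]


# Hypothetical ontology: 'vehicle' with a 'car' sub-label that requires a color.
ontology = flatten([Label("vehicle", children=[Label("car", attributes=["color"])])])
print(sorted(ontology))
print(validate({"label": "vehicle.car", "attributes": {}}, ontology))
print(validate({"label": "vehicle.car", "attributes": {"color": "red"}}, ontology))
```

Flattening the tree into dotted paths keeps lookups simple while preserving the hierarchy in the path itself.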

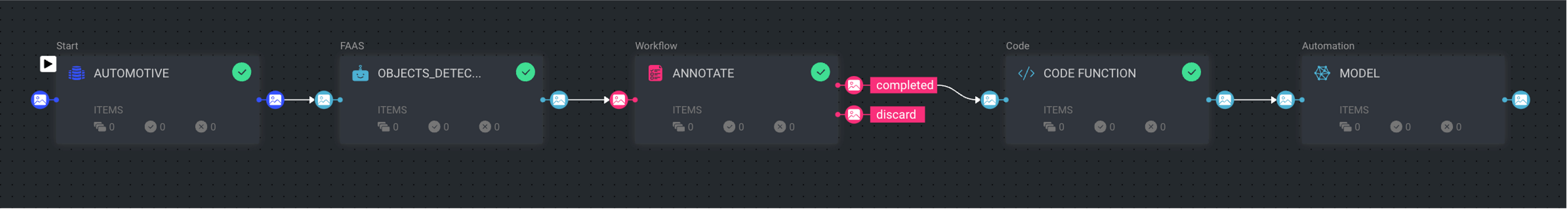

Data Pipelines

Compose pipelines to process data with human-in-the-loop (HITL). Support your business processes and your development or project pipelines by combining functions, models, and manual annotation work into any data flow you need. You can also add custom nodes to a pipeline and use the UI settings to build and define each node's functionality.
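The combination of functions, models, and manual work described here can be sketched as a chain of nodes in which low-confidence predictions are routed to a human review queue. Everything in this example is hypothetical: the node functions, the stubbed model output, and the confidence threshold are illustration-only assumptions, not Dataloop pipeline APIs.

```python
from typing import Any, Callable, Dict, List

Item = Dict[str, Any]
Node = Callable[[Item], Item]


def run_pipeline(item: Item, nodes: List[Node]) -> Item:
    """Pass an item through each node in order and return the final result."""
    for node in nodes:
        item = node(item)
    return item


def model_node(item: Item) -> Item:
    """Stub model: attaches a fixed prediction instead of running inference."""
    return {**item, "label": "cat", "confidence": 0.62}


def make_review_node(threshold: float, review_queue: List[str]) -> Node:
    """Build a node that sends low-confidence items to a human review queue."""
    def review_node(item: Item) -> Item:
        if item["confidence"] < threshold:
            review_queue.append(item["id"])
            return {**item, "status": "needs_review"}
        return {**item, "status": "auto_approved"}
    return review_node


# The queue stands in for a manual annotation task assigned to people.
queue: List[str] = []
result = run_pipeline({"id": "img1"}, [model_node, make_review_node(0.8, queue)])
print(result["status"], queue)
```

Because each node is a plain function from item to item, a custom node in this sketch is simply another function added to the list.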
